In regulated organizations, every vendor onboarding, payment release, contract exception, and access request must be reviewed, approved, documented, and later defended.

On paper, this sounds manageable. In reality, it’s where speed goes to die and risk quietly builds up.

You’re under pressure from two sides. Business teams want faster decisions to keep revenue moving. Risk, legal, and compliance teams need tighter controls, clearer accountability, and defensible audit trails.

According to reports, 59% of security leaders say that their organizations have multiple systems that must adhere to compliance requirements. Meanwhile, regulators continue to raise expectations around explainability and governance.

This tension is why AI for approvals automation is gaining traction across finance, legal, compliance, and risk teams.

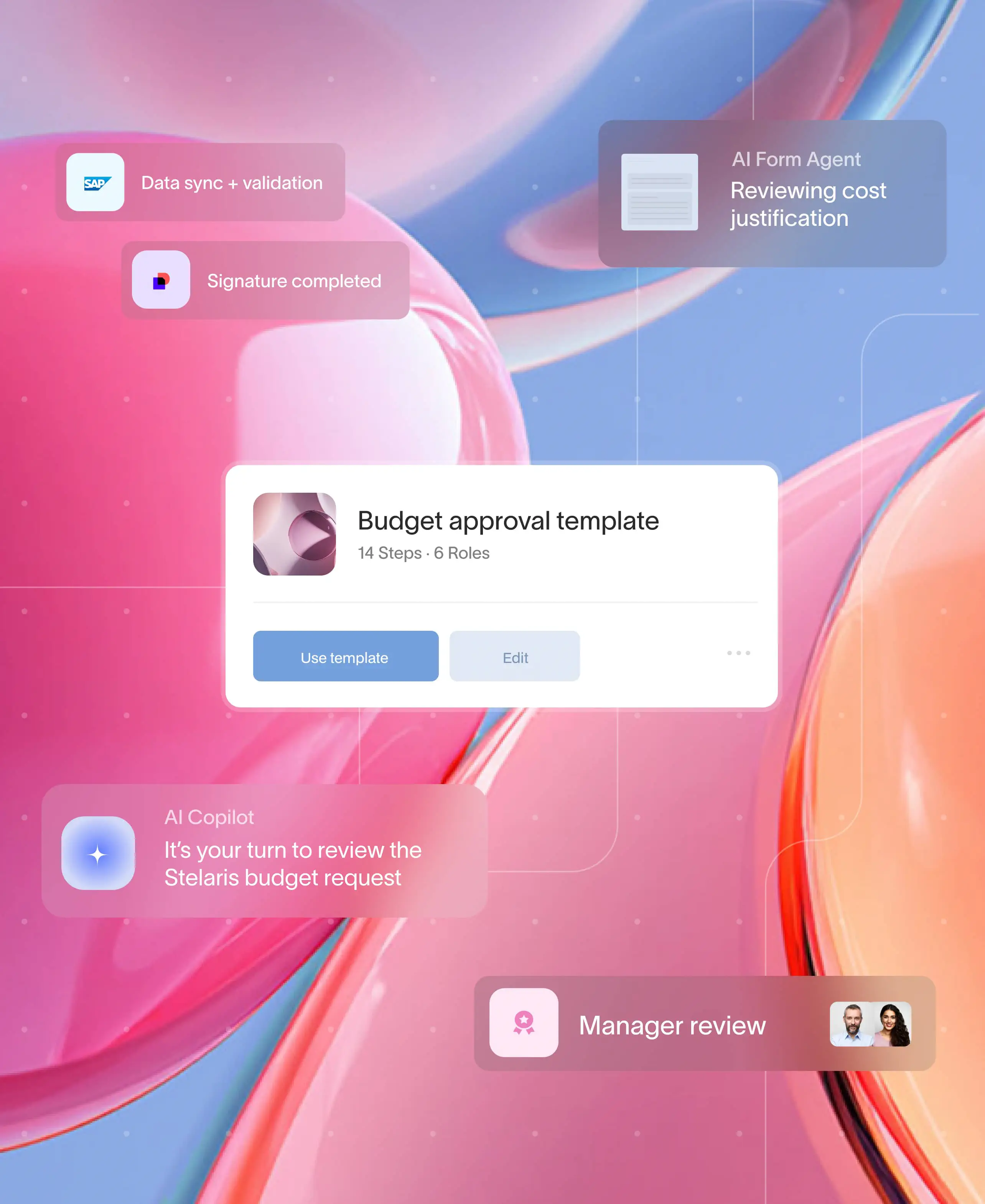

Let’s learn how AI-assisted approvals, enabled by Moxo's human+AI orchestration platform, are changing how approvals work at scale.

Key takeaways

- AI for approvals automation accelerates low-risk decisions while ensuring high-risk and uncertain approvals receive structured human oversight.

- Human-in-the-loop approvals are essential for managing context, detecting bias and model drift, and meeting rising regulatory expectations around decision accountability.

- Auditable workflows with built-in logs are critical for compliance, enabling organizations to prove who approved what, when, and why without relying on emails.

- Moxo acts as a control layer that orchestrates AI risk scoring and human intervention in a single, secure workflow, delivering faster approvals without compromising compliance.

Why approval workflows are a bottleneck in regulated organizations

Approval workflows were never designed for today’s volume, complexity, or regulatory scrutiny. Most systems still rely on tools and habits built for a much simpler risk environment.

As organizations grow, approvals don’t just increase in number. They multiply in complexity, stakeholders, and consequences. Without redesign, these workflows quickly become operational choke points.

Manual reviews, email chains, and spreadsheet trackers

Many approval processes still live in inboxes and shared spreadsheets, where emails get buried, spreadsheets go out of sync, and context disappears. When auditors ask who approved what and why, teams scramble to reconstruct decisions after the fact.

Most approval systems fail in the same ways.

They rely on:

- Email chains with no shared context

- Spreadsheets that drift out of sync

- Informal “looks good” approvals

- Manual escalation when something feels risky

The bigger risk is not that approvals are slow. It’s that they are opaque.

Approval fatigue and inconsistent decision-making

When approvers face dozens or hundreds of similar requests, fatigue sets in. The IAPP highlights “decision fatigue” as a growing compliance risk, noting that repetitive approvals often lead to rubber-stamping rather than thoughtful review.

Two identical requests can receive different outcomes depending on timing, workload, or who happens to be online. That inconsistency becomes a liability during audits and investigations.

Growing regulatory pressure on risk management and compliance teams

Regulators now expect not just decisions, but explanations. Regulations such as GDPR and SOX, attestation frameworks such as SOC 2, and industry-specific rules increasingly require proof of oversight, escalation logic, and human accountability.

According to Gartner, by 2026, 70% of organizations will be required to demonstrate explainability in automated decision-making, especially where financial or legal risk is involved.

Why simply “automating approvals” is not enough

Basic automation can speed up approvals, but speed without judgment is dangerous. Straight-through processing with no human oversight can amplify errors, embed bias, and create systemic risk.

What you need is not blind automation, but intelligent orchestration. That’s the shift toward AI-assisted approvals with structured human control.

What AI for approvals automation really means

AI for approvals automation is often misunderstood as letting machines make final decisions. In reality, the most effective systems treat AI as a decision support layer, not an authority.

When designed correctly, AI helps you prioritize attention, reduce noise, and apply human judgment where it adds the most value.

AI as a decision support layer, not a final authority

AI analyzes patterns, flags anomalies, and predicts risk. Humans remain accountable for outcomes. This approach aligns with regulatory guidance from bodies like the FTC and EU regulators, who emphasize “meaningful human oversight” in automated decisions.

You’re not removing humans from the loop. You’re making their time count.

Common AI approval scenarios across teams

AI for approvals automation works especially well in repeatable, rules-driven workflows, such as:

- Customer or vendor onboarding

- Expense reimbursements and payments

- Contract approvals and exceptions

- System access and privilege requests

Instead of treating every request the same, AI triages them. Low-risk requests move quickly. High-risk ones get attention early.

For example:

- Auto-approve expense reports under $500 that match policy

- Route vendor contracts over $50,000 to legal and finance

- Flag access requests outside normal role patterns for review
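The three example rules above can be written as plain, reviewable code before any model is involved. This is a minimal Python sketch; the `Request` fields, route names such as `security_review`, and the fallback to `manual_review` for anything the rules don’t explicitly clear are illustrative assumptions, not part of any particular product.

```python
from dataclasses import dataclass


# Hypothetical request shape; field names are illustrative, not a real schema.
@dataclass
class Request:
    kind: str                        # "expense", "vendor_contract", or "access"
    amount: float = 0.0              # USD
    matches_policy: bool = True
    within_role_pattern: bool = True


def triage(req: Request) -> list[str]:
    """Return the route(s) for a request under the three example rules."""
    if req.kind == "expense" and req.amount < 500 and req.matches_policy:
        return ["auto_approve"]
    if req.kind == "vendor_contract" and req.amount > 50_000:
        return ["legal", "finance"]
    if req.kind == "access" and not req.within_role_pattern:
        return ["security_review"]
    # Assumption: anything the rules do not explicitly clear goes to a person.
    return ["manual_review"]
```

Defaulting to human review keeps the rule set fail-safe: a request type nobody anticipated is slowed down rather than waved through.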

This approach reduces bottlenecks without weakening controls.

Why confidence scoring matters

Confidence scoring lets AI express uncertainty. Rather than a binary yes/no, the system assigns a confidence level to each recommendation.

Low confidence doesn’t mean rejection. It means escalation. That distinction is critical for trust and compliance.
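One way to encode “low confidence means escalation, not rejection” is sketched below in Python. The `Recommendation` type, the 0.85 cut-off, and the choice to have a person confirm even confident rejections are assumptions for illustration; real thresholds would be set and reviewed by risk owners.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    decision: str      # model's suggested outcome: "approve" or "reject"
    confidence: float  # 0.0 to 1.0, how sure the model is


# Illustrative cut-off, not a recommended value.
CONFIDENCE_THRESHOLD = 0.85


def next_action(rec: Recommendation, threshold: float = CONFIDENCE_THRESHOLD) -> str:
    if not 0.0 <= rec.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if rec.confidence < threshold:
        # Low confidence is never converted into a rejection; a person decides.
        return "escalate_to_human"
    if rec.decision == "approve":
        return "auto_approve"
    # Assumption: even a confident "reject" is confirmed by a person, not closed silently.
    return "human_confirm_rejection"
```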

How AI for approvals actually works

In practice, an AI-assisted approval moves through six steps.

Step 1: Gather everything needed to understand the request

Each approval starts by bringing together all relevant inputs in one place. This includes the information entered in forms, the documents attached to the request, the requester's identity and role, and signals such as when the request was submitted and how often similar requests occur.

Instead of looking at a single data point, the system builds context so the request can be evaluated within a broader operational and risk picture.
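As an illustration of Step 1, this minimal Python sketch assembles form data, attachments, requester identity and role, and timing signals into one context object. The `RequestContext` and `build_context` names, the 30-day window, treating “similar” as the same requester and request type, and the business-hours definition are all assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class RequestContext:
    form_data: dict
    attachments: list
    requester_id: str
    requester_role: str
    submitted_at: datetime
    similar_requests_last_30d: int = 0

    @property
    def outside_business_hours(self) -> bool:
        # Illustrative definition: weekends, or before 08:00 / after 18:00.
        ts = self.submitted_at
        return ts.weekday() >= 5 or not (8 <= ts.hour < 18)


def build_context(form_data, attachments, requester_id, requester_role,
                  submitted_at, history):
    """Assemble one context object; history holds (requester_id, type, submitted_at) tuples."""
    window_start = submitted_at - timedelta(days=30)
    # Count this requester's requests of the same type in the preceding 30 days.
    similar = sum(
        1
        for rid, rtype, ts in history
        if rid == requester_id
        and rtype == form_data.get("type")
        and window_start <= ts < submitted_at
    )
    return RequestContext(dict(form_data), list(attachments), requester_id,
                          requester_role, submitted_at, similar)
```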

Step 2: Compare the request against past decisions and outcomes

The next step involves looking backwards before moving forward.

Here, previous approvals, rejections, overrides, and their real-world outcomes are analyzed to understand what has historically gone wrong and what has proven safe.

When reviewers have overridden automated recommendations in the past, those decisions are treated as valuable guidance. Over time, this helps align risk assessment with how people actually think and decide, not just how rules are written.
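Step 2 can be approximated by summarizing how similar past requests turned out. This Python sketch assumes a hypothetical `PastDecision` record and defines “similar” as the same request type with an amount within ±25%; both are illustrative choices. A count of zero signals that there is no precedent to lean on.

```python
from dataclasses import dataclass


@dataclass
class PastDecision:
    request_type: str
    amount: float
    outcome: str           # "approved" or "rejected"
    overridden: bool       # a reviewer reversed the automated recommendation
    led_to_incident: bool  # e.g. later fraud, dispute, or audit finding


def historical_profile(history, request_type, amount, band=0.25):
    """Summarize past decisions for requests of the same type and similar size."""
    lo, hi = amount * (1 - band), amount * (1 + band)
    similar = [d for d in history
               if d.request_type == request_type and lo <= d.amount <= hi]
    n = len(similar)
    if n == 0:
        # No precedent: downstream steps should treat this as uncertainty.
        return {"count": 0, "approval_rate": None,
                "override_rate": None, "incident_rate": None}
    return {
        "count": n,
        "approval_rate": sum(d.outcome == "approved" for d in similar) / n,
        "override_rate": sum(d.overridden for d in similar) / n,
        "incident_rate": sum(d.led_to_incident for d in similar) / n,
    }
```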

Step 3: Check the request against policy and compliance requirements

Organizational policies and regulatory obligations act as non-negotiable guardrails. Financial limits, approval hierarchies, contract thresholds, and compliance rules are applied consistently at this stage.

Requests that cross these boundaries are flagged automatically, ensuring that speed never comes at the expense of governance or regulatory exposure.
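Policy guardrails work best as explicit, deterministic rules that run regardless of any model score. In this Python sketch, each rule returns a violation message or `None`; the `ApprovalRequest` fields and every limit shown are placeholders, not recommended values.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ApprovalRequest:
    kind: str
    amount: float
    approver_level: int        # seniority of the assigned approver
    contract_deviations: int = 0


Rule = Callable[[ApprovalRequest], Optional[str]]


def spending_limit(req: ApprovalRequest) -> Optional[str]:
    if req.kind == "payment" and req.amount > 10_000:
        return "payment exceeds 10,000 auto-release limit"
    return None


def approval_hierarchy(req: ApprovalRequest) -> Optional[str]:
    # Larger amounts require more senior approvers (placeholder tiers).
    required = 3 if req.amount > 100_000 else 2 if req.amount > 25_000 else 1
    if req.approver_level < required:
        return f"approver level {req.approver_level} below required level {required}"
    return None


def contract_terms(req: ApprovalRequest) -> Optional[str]:
    if req.kind == "contract" and req.contract_deviations > 0:
        return f"{req.contract_deviations} deviation(s) from standard terms"
    return None


POLICY_RULES: list[Rule] = [spending_limit, approval_hierarchy, contract_terms]


def check_policies(req: ApprovalRequest, rules=POLICY_RULES) -> list[str]:
    """Run every rule; any violation blocks automatic approval regardless of risk score."""
    return [msg for rule in rules if (msg := rule(req)) is not None]
```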

Step 4: Assess risk and uncertainty in a way people can understand

All signals, historical patterns, and policy checks are combined into a clear risk assessment.

Instead of exposing complex models or probabilities, the outcome is expressed in practical terms such as low, medium, or high risk, along with an explanation of what contributed to that assessment.

This makes the decision logic transparent, defensible, and easy for reviewers to trust.
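A simple way to turn signals into a plain-language risk level with reasons is a weighted score with fixed cut-offs. This Python sketch assumes each signal is already normalized to 0–1; the weighted-sum approach, the 0.3/0.6 bands, and the 0.15 “notable contribution” cut-off are illustrative assumptions, and any policy violation forces a high rating.

```python
def assess_risk(signals: dict[str, float], weights: dict[str, float],
                policy_violations: list[str]) -> tuple[str, list[str]]:
    """Combine weighted signals (each 0-1) into low/medium/high plus reasons."""
    reasons = list(policy_violations)
    score = 0.0
    for name, value in signals.items():
        contribution = weights.get(name, 0.0) * value
        score += contribution
        if contribution >= 0.15:
            # Only notable contributors are surfaced, so the explanation stays short.
            reasons.append(f"{name} contributed {contribution:.2f}")
    if policy_violations or score >= 0.6:
        level = "high"
    elif score >= 0.3:
        level = "medium"
    else:
        level = "low"
    return level, reasons
```

Returning reasons alongside the level is what lets a reviewer see why a request was flagged without reading model internals.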

Step 5: Move the request forward based on its risk profile

Low-risk requests that closely match policy and past safe outcomes move ahead automatically without waiting in approval queues.

Requests that show uncertainty or higher risk are routed to the right reviewers with full context and supporting information. This ensures that human attention is focused on decisions where judgment, nuance, and accountability actually matter.
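Routing by risk profile can be sketched as below in Python. The reviewer mapping, the `compliance` fallback owner, and the urgent/normal priority split are hypothetical; the point is that only clean, low-risk requests skip the queue, and everything else arrives with its reasons attached.

```python
# Hypothetical mapping from request type to the reviewer queue that owns it.
REVIEWERS = {
    "contract": "legal",
    "payment": "finance",
    "vendor_onboarding": "procurement",
    "access": "security",
}


def route(request_type: str, risk_level: str, reasons: list[str]) -> dict:
    if risk_level == "low" and not reasons:
        return {"action": "auto_approve", "queue": None, "priority": None, "context": []}
    # Assumption: unknown request types fall back to compliance.
    queue = REVIEWERS.get(request_type, "compliance")
    # High-risk items are prioritized so judgment is applied early.
    priority = "urgent" if risk_level == "high" else "normal"
    return {"action": "human_review", "queue": queue,
            "priority": priority, "context": list(reasons)}
```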

Step 6: Learn continuously from human decisions

Every approval, rejection, and override feeds back into the system. Patterns in human judgment help refine how risk is assessed over time, reduce blind spots, and prevent outdated assumptions from driving decisions.

This feedback loop keeps the process aligned with changing policies, business conditions, and real operational risk.
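One simple form of this feedback loop is to adjust the auto-approval confidence threshold based on how often reviewers disagree with the AI. The Python sketch below is a hypothetical heuristic, not a standard algorithm: it raises the threshold when disagreement exceeds a target rate, lowers it only when agreement is strong, and returns a proposal that risk owners would still need to sign off on.

```python
def recalibrate_threshold(current: float, reviews: list[tuple[str, str]],
                          target_override_rate: float = 0.05,
                          step: float = 0.02, floor: float = 0.5,
                          ceiling: float = 0.99) -> float:
    """Suggest a new confidence threshold from (ai_recommendation, human_decision) pairs."""
    if not reviews:
        return current
    overrides = sum(ai != human for ai, human in reviews)
    rate = overrides / len(reviews)
    if rate > target_override_rate:
        proposed = current + step   # humans disagree often: automate less
    elif rate < target_override_rate / 2:
        proposed = current - step   # strong agreement: automate slightly more
    else:
        proposed = current          # within tolerance: leave unchanged
    # Clamp to a safe range and round to avoid floating-point noise.
    return round(min(ceiling, max(floor, proposed)), 4)
```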

Moxo is a business process orchestration platform designed to manage complex, multi-party workflows that involve documents, approvals, and ongoing collaboration. It provides the orchestration layer that ties all six of these steps together without adding friction.

You can design structured approval flows that combine document collection, automated checks, and human review in a single, guided experience.

Because all activity happens inside secure client workflows, every decision is traceable and auditable by default.

External participants can submit documents or respond to requests via secure links without creating accounts, and internal teams can see exactly where each approval stands.

The result is faster decisions, cleaner handoffs, and human judgment applied precisely where it protects the business.

Why human override is essential in AI-powered approvals

AI can accelerate approvals and improve consistency, but real-world decisions still depend on human judgment, especially where risk, compliance, and accountability are involved.

- Human override is not a backup: AI works on patterns and probabilities. It cannot grasp intent, consequences, or evolving business realities. Regulators now expect visible human involvement in high-risk automated decisions.

- Risk management requires judgment: Approvers balance trade-offs like speed versus caution and policy versus continuity. Humans can adapt when strict enforcement would cause unintended harm.

- Many approvals require context beyond data: Strategic partnerships, operational incidents, or new regulations often exist outside structured datasets. Humans add situational awareness where AI sees only inputs.

- Human review prevents systemic errors and edge-case failures: Automated decisions can be technically correct but practically damaging. Human intervention stops isolated edge cases from becoming repeatable failures.

- Overrides help detect model drift and hidden bias: As conditions change, models trained on past data lose accuracy. Human overrides act as feedback, helping teams recalibrate risk logic and update policies.

- Trust grows when humans stay in control: Adoption improves when AI recommendations are explainable and challengeable. When AI supports decisions rather than dictates them, teams engage rather than resist.

Moxo is built to support this exact balance between automation and human control.

It allows you to define clear override points in approval workflows, so high-risk or uncertain decisions are automatically routed to the right people with full context and documentation.

Every override is logged and explainable, creating a defensible audit trail while ensuring AI accelerates decisions without sidelining human judgment.

Common scenarios requiring human intervention

Not all approvals are created equal. Certain scenarios consistently demand human involvement, regardless of how advanced the AI model is.

Low-confidence AI scores

Uncertainty should never default to automation. When AI confidence is low, the correct response is escalation, not acceleration. Human review ensures that ambiguous cases are handled thoughtfully rather than rushed.

High-value or high-impact approvals

Large financial transactions, sensitive contracts, or privileged system access carry consequences that extend beyond immediate outcomes. Even if AI signals low risk, these decisions warrant human accountability due to their potential impact.

Policy exceptions and new risk patterns

Exceptions are where learning happens. When requests fall outside established policies or reveal new risk patterns, humans play a critical role in interpreting intent, updating rules, and guiding future automation and workflow management.

In AI for approvals automation, human override is not a sign of failure. It is the mechanism that ensures speed, safety, and accountability can coexist.

Guardrails that keep AI approvals compliant and controlled

AI for approvals automation delivers speed, but speed without structure creates risk.

Guardrails define how far AI can go, when it must stop, and how humans remain accountable for outcomes. In regulated organizations, these guardrails are not optional. They are the foundation that keeps automation defensible, auditable, and aligned with regulatory expectations.

Well-designed guardrails ensure that AI accelerates decision-making without becoming an unchecked authority. They clarify responsibility, reduce ambiguity, and protect your organization from compliance failures that stem from over-automation.

Clear approval thresholds

Clear thresholds are the first and most important control in any AI-assisted approval system. They determine which decisions AI can handle autonomously and which ones must be escalated for human review.

Thresholds may be based on monetary value, risk score, data completeness, or regulatory sensitivity. For example, routine expense approvals under a defined amount may proceed automatically, while payments exceeding that threshold are escalated. Contracts with standard terms may move quickly, while deviations trigger review.
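The four threshold dimensions named above (monetary value, risk score, data completeness, and regulatory sensitivity) can be combined into a single escalation check. In this Python sketch, the `Thresholds` fields, their default values, and the sensitive category names are illustrative placeholders for an organization’s own policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    # All values are illustrative placeholders, not recommendations.
    max_auto_amount: float = 500.0
    max_auto_risk_score: float = 0.3
    min_data_completeness: float = 1.0  # share of required fields present
    sensitive_categories: frozenset = frozenset({"personal_data", "sanctions"})


def escalation_reasons(amount, risk_score, provided_fields, required_fields,
                       categories, t: Thresholds = Thresholds()) -> list[str]:
    reasons = []
    if amount > t.max_auto_amount:
        reasons.append("amount above auto-approval limit")
    if risk_score > t.max_auto_risk_score:
        reasons.append("risk score above threshold")
    completeness = (len(set(provided_fields) & set(required_fields)) / len(required_fields)
                    if required_fields else 1.0)
    if completeness < t.min_data_completeness:
        reasons.append(f"only {completeness:.0%} of required data present")
    if t.sensitive_categories & set(categories):
        reasons.append("regulatory-sensitive category")
    return reasons  # an empty list means the request may proceed automatically
```

Returning every triggered reason, rather than stopping at the first, gives the reviewer the full picture in one place.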

How Moxo enables fast, auditable human intervention

Moxo’s solutions are designed to act as the control layer between AI-driven decision intelligence and human accountability.

Instead of forcing teams to choose between speed and oversight, Moxo brings both into a single, secure workflow where AI recommendations and human actions are coordinated, traceable, and compliant by design.

In AI for approvals automation, the biggest risk isn’t automation itself. It’s fragmentation. When AI insights, approvals, and audit evidence live in different systems, accountability breaks down. Moxo solves this by orchestrating every decision and override in one place.

As one G2 reviewer puts it:

“I love the ability to track all steps of a task and keep information secure and organized. This provides peace of mind knowing sensitive data is safe and accessible. I also appreciate the organized file management, which keeps essential information in one place securely.”

Orchestrating AI decisions and human overrides in one workflow

Moxo connects AI-generated risk scores directly to structured approval workflows. When AI identifies a request as low risk, the workflow can progress automatically. When risk or uncertainty increases, Moxo routes the case to the right human reviewer without breaking the flow.

This orchestration ensures that AI never operates in isolation. Every recommendation is tied to a defined approval path, escalation rule, and accountability structure. Human overrides are not side conversations or manual workarounds. They are first-class actions within the workflow.

Secure, role-based access for approvers

In regulated environments, who sees a decision matters as much as how it is made. Moxo enforces role-based access controls so that only authorized individuals can review, override, or approve high-risk requests.

Legal teams see legal issues. Finance reviews financial exposure. Compliance retains oversight without becoming a bottleneck. This precision reduces noise for approvers while strengthening internal controls and separation of duties.
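Role-based access and separation of duties can be expressed as a small permission check. This Python sketch is not Moxo’s API; the role names, the scope mapping, and the rule that only designated roles may override an AI recommendation are hypothetical, and a real system would load them from its identity and access management setup.

```python
# Hypothetical role-to-scope mapping.
ROLE_SCOPES = {
    "legal_reviewer": {"contract"},
    "finance_approver": {"payment", "expense"},
    "compliance_officer": {"contract", "payment", "expense", "access"},
}
OVERRIDE_ROLES = {"compliance_officer"}


def can_act(user_id: str, role: str, action: str,
            request_type: str, requester_id: str) -> bool:
    if user_id == requester_id:
        return False  # separation of duties: nobody approves their own request
    if request_type not in ROLE_SCOPES.get(role, set()):
        return False  # outside this role's remit
    if action == "override" and role not in OVERRIDE_ROLES:
        return False  # only designated roles may overrule the AI recommendation
    return True
```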

Structured approval actions instead of email approvals

Email approvals are fast but also opaque, inconsistent, and nearly impossible to audit at scale. Moxo replaces inbox-based decisions with structured approval actions that require explicit, traceable responses.

Approvers don’t just click “approved.” They choose defined actions, add rationale, attach documentation, and acknowledge risk when necessary. This structure improves decision quality while ensuring every action is recorded in a consistent, auditable format.
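A structured approval action can be modeled as a fixed set of choices plus a validated decision record. In this Python sketch, the `Action` values, the `Decision` fields, and the requirement to acknowledge risk before approving a high-risk request are assumptions that illustrate the idea rather than any product’s data model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Action(Enum):
    APPROVE = "approve"
    APPROVE_WITH_CONDITIONS = "approve_with_conditions"
    REJECT = "reject"
    REQUEST_INFO = "request_info"


@dataclass(frozen=True)
class Decision:
    request_id: str
    approver_id: str
    action: Action
    rationale: str
    attachments: tuple[str, ...] = ()
    risk_acknowledged: bool = False
    decided_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def record_decision(request_id, approver_id, action, rationale, risk_level,
                    attachments=(), risk_acknowledged=False) -> Decision:
    if not rationale.strip():
        raise ValueError("a rationale is required for every decision")
    approving = action in (Action.APPROVE, Action.APPROVE_WITH_CONDITIONS)
    if risk_level == "high" and approving and not risk_acknowledged:
        raise ValueError("approving a high-risk request requires explicit risk acknowledgement")
    return Decision(request_id, approver_id, action, rationale.strip(),
                    tuple(attachments), risk_acknowledged)
```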

Built-in audit trails for compliance and risk teams

Every action taken in Moxo is logged automatically, including approvals, overrides, comments, timestamps, and supporting documents.

For compliance and risk teams, this means audit readiness is continuous. Logs are searchable, exportable, and aligned with regulatory expectations. Instead of reconstructing decisions after the fact, teams can demonstrate oversight in real time.
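To make “searchable and exportable” concrete, here is a minimal in-memory audit log in Python. The `AuditLog` class and its entry fields are hypothetical; a production system would use durable, access-controlled, tamper-evident storage rather than a Python list.

```python
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only in-memory log for illustration only."""

    def __init__(self):
        self._entries = []

    def record(self, actor, action, request_id, detail=""):
        entry = {
            "seq": len(self._entries) + 1,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "request_id": request_id,
            "detail": detail,
        }
        self._entries.append(entry)
        return dict(entry)  # a copy, so callers cannot alter the stored record

    def search(self, **filters):
        # Exact-match filtering on any field, e.g. search(request_id="r1").
        return [dict(e) for e in self._entries
                if all(e.get(k) == v for k, v in filters.items())]

    def export_jsonl(self) -> str:
        # One JSON object per line, a common format for handing logs to auditors.
        return "\n".join(json.dumps(e, sort_keys=True) for e in self._entries)
```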

Using Moxo when AI confidence is low

Low AI confidence is not a failure state. It’s a signal that human judgment is required.

Routing cases for rapid human review

When AI confidence drops below a defined threshold, Moxo immediately escalates the request to the appropriate reviewer with full context. The approver can see the AI’s assessment, risk indicators, and supporting data in one place, enabling faster, more informed decisions.

Maintaining speed without bypassing controls

Even during escalation, Moxo preserves momentum. Approvals move quickly because context is centralized and decision paths are clear. You avoid delays without compromising accountability, which is essential for safely scaling AI for approvals automation.

Balance speed, risk, and accountability with Moxo

AI is changing approvals, but not by removing humans. It’s changing them by making human judgment more targeted, timely, and defensible.

AI for approvals automation works best when it assumes uncertainty, designs for intervention, and documents decisions by default. Human-in-the-loop approvals are quickly becoming a compliance expectation, not a future trend.

With Moxo, you position your organization to move faster without losing control. You accelerate decisions while strengthening trust, accountability, and audit readiness. In today’s regulatory environment, that balance isn’t optional. It’s the new standard.

Get started with Moxo today for complete, built-in audit trails.

FAQs

1. What is AI for approvals automation?

AI for approvals automation uses machine learning to assess risk, prioritize requests, and route approvals intelligently. It accelerates low-risk decisions while ensuring high-risk or uncertain cases receive structured human review and oversight.

2. Does AI for approvals replace human decision-making?

No. AI supports decision-making by analyzing data and flagging risk, but humans remain accountable. Platforms like Moxo enable human override, ensuring judgment, context, and compliance remain central to every approval.

3. How does Moxo ensure approvals remain auditable?

Moxo automatically records every approval action, override, comment, and timestamp within structured workflows. These built-in audit trails make it easy to demonstrate accountability, explain decisions, and meet regulatory requirements.

4. What types of approvals benefit most from AI-assisted workflows?

High-volume, repeatable approvals such as expense claims, vendor onboarding, contract reviews, payments, and access requests benefit most. AI accelerates routine cases while escalating exceptions and high-risk decisions to human reviewers.

5. How do human overrides improve AI approval accuracy over time?

Human overrides provide feedback that helps refine risk models and thresholds. By learning from real-world decisions, AI systems can become more accurate, surface hidden bias, and adapt to evolving policies and risk patterns.