AI automation does not fail at launch. It fails quietly in production.

Most teams put enormous effort into building and deploying AI-driven workflows. Once those workflows go live, attention shifts elsewhere. The assumption is that automation will continue to operate as designed.

In reality, this is when the real work begins.

Models drift. Exceptions increase. Approvals slow down. Informal workarounds appear. Trust erodes, even if the underlying technology is sound.

According to Forbes, over 90% of AI projects fail to move beyond pilot or early deployment because organizations lack operational governance and monitoring structures.

Managed AI automation services exist to close this gap. They apply a lifecycle mindset (run, monitor, improve) so that AI remains a reliable execution capability rather than a one-time project.

With Moxo acting as the orchestration and control layer, you bring structure, accountability, and visibility to AI-led workflows. This guide explains how that approach helps you sustain real business value.

Key takeaways

- AI automation requires ongoing operational ownership. Deployment is the start, not the finish.

- Run, monitor, and improve is a lifecycle, not a service tier. Each phase reinforces reliability and trust.

- Human-in-the-loop is essential in production. It preserves accountability without slowing execution.

- Orchestration enables sustainability. Without a control layer, AI workflows fragment over time.

Why project managers need managed AI automation services

As a Project Manager, you already know the difference between delivering a project and running an operation. Delivery success is hitting scope, timelines, and budget. Operational success is whether the system works reliably six months later under real-world pressure.

Post-launch AI risks are rarely technical failures. They’re operational failures. Workflows behave unpredictably, stakeholders override systems informally, and exceptions bypass controls.

McKinsey reports that fewer than 30% of organizations say their CEO directly sponsors the AI agenda, a sign that ownership often becomes unclear once AI moves past deployment.

You’re also balancing a difficult tension. The business wants continuous improvement and faster decisions, while compliance teams demand stability, traceability, and control. Without managed AI automation services, these goals directly conflict.

Ownership doesn’t stop at go-live. In fact, that’s when it truly begins. You’re responsible for ensuring AI-driven workflows remain reliable, auditable, and aligned with business goals.

A structured run–monitor–improve model gives you the framework to do exactly that, without slowing the organization down.

Phase 1 – run: Operating AI automation reliably

Running AI automation is about ensuring day-to-day stability under real operating conditions. This phase determines whether automation becomes a dependable system or a constant source of escalations.

Ensuring day-to-day workflow stability

At runtime, AI rarely operates alone. It interacts with systems, people, and policies. Stability comes from orchestrating these moving parts into a single, predictable flow. When AI outputs trigger downstream actions, humans must know when to step in, validate, or override decisions.

Exception handling is critical here. Automated workflows in regulated environments routinely encounter exceptions. Without structured handling, teams fall back to emails, spreadsheets, or side conversations, which breaks the workflow entirely.

Reliable AI operations ensure exceptions are absorbed into the process rather than pushed outside it.

Managing approvals and human intervention

Human-in-the-loop isn’t a weakness of AI; it’s a requirement for trust. The challenge is ensuring human intervention doesn’t become a bottleneck. Clear escalation paths define who approves what, under which conditions, and within what timeframe.

Without this clarity, decision latency increases, and poorly designed approval chains can add significant cycle time to otherwise automated processes. Managed AI automation services ensure AI assists decisions while humans retain authority, without stalling operations.
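To make "who approves what, under which conditions, and within what timeframe" concrete, here is a minimal Python sketch of an escalation policy expressed as data. The step name, amount thresholds, roles, and SLAs are hypothetical, and this is not Moxo's configuration format or API; it only illustrates a rule set in which ownership is never ambiguous and overdue approvals move on automatically.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class ApprovalRule:
    """One row of a hypothetical escalation policy."""
    step: str            # workflow step the rule applies to
    max_amount: float    # rule matches when the request amount is at or below this
    approver: str        # role that must approve
    sla: timedelta       # time allowed before escalation
    escalate_to: str     # role that takes over once the SLA is breached


# Rules are checked in order and the first match wins, so tighter thresholds come first.
RULES = [
    ApprovalRule("refund", 1_000, "team_lead", timedelta(hours=4), "ops_manager"),
    ApprovalRule("refund", float("inf"), "ops_manager", timedelta(hours=24), "finance_director"),
]


def route(step: str, amount: float, submitted: datetime, now: datetime) -> str:
    """Return the role that should currently own the approval."""
    for rule in RULES:
        if rule.step == step and amount <= rule.max_amount:
            # Escalate once the approver has held the item longer than the SLA.
            if now - submitted > rule.sla:
                return rule.escalate_to
            return rule.approver
    # No matching rule means the policy has a gap; fail loudly instead of guessing.
    raise LookupError(f"no approval rule for step={step!r}, amount={amount}")
```

Keeping rules as ordered data rather than scattered `if` statements makes the policy easy to review with compliance and to change without touching workflow logic. For example, a 500-unit refund submitted five hours ago has breached the team lead's four-hour SLA, so `route("refund", 500, ...)` returns `"ops_manager"`.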

Maintaining stakeholder visibility

Operational transparency is non-negotiable. Business teams need to see progress, compliance teams need traceability, and leadership needs confidence. When visibility is fragmented across tools, trust disappears. A stable run phase ensures every stakeholder sees the same workflow state in real time.

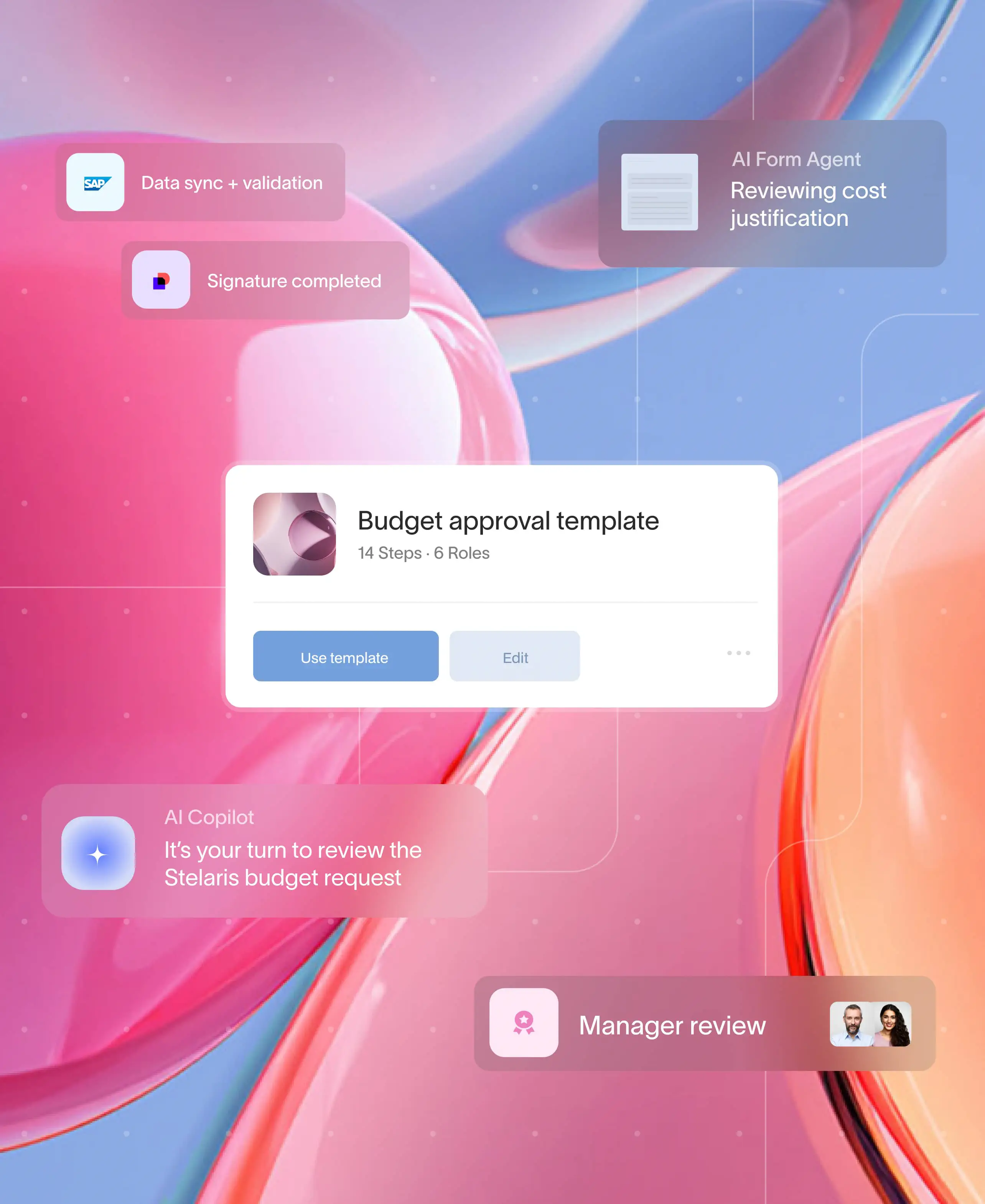

How Moxo supports controlled AI execution

Moxo is a platform for process orchestration in business operations.

It coordinates AI actions, human decisions, and system steps inside structured workflows. AI prepares and flags. Humans approve and resolve. The workflow advances without manual chasing.

This consistency turns automation into infrastructure. The same approved logic executes every time, reducing operational noise and firefighting without slowing teams down.


Organizations with structured automation governance, like predictive deployment approaches, see up to 35% fewer production incidents. Moxo enables that control without introducing friction.

As a G2 reviewer says,

“I love the ability to track all steps of a task and keep information secure and organized. This provides peace of mind knowing sensitive data is safe and accessible. I also appreciate the organized file management, which keeps essential information in one place securely.”

Phase 2 – monitor: Maintaining performance and trust

Monitoring is where most AI initiatives fail silently. Systems appear functional, but value slowly erodes. Managed AI automation services surface problems before they become failures.

Tracking workflow and AI performance

Performance monitoring isn’t just about uptime. It’s about cycle times, exception frequency, and human intervention rates. When approval steps increase or exceptions spike, it often signals upstream issues, such as data quality problems or unclear policies.

Early detection matters. Organizations that actively monitor AI workflows detect performance degradation faster than those relying on periodic reviews. Monitoring lets you intervene while issues are still manageable.
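The three signals named above (cycle time, exception frequency, and human intervention rate) can be computed from ordinary run records. The sketch below assumes a hypothetical `WorkflowRun` record with a cycle time, an exception flag, and a count of human touches; your orchestration platform's export will look different, so treat the field names as placeholders.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class WorkflowRun:
    """Hypothetical record of one completed workflow instance."""
    cycle_hours: float    # start-to-finish time in hours
    had_exception: bool   # did the run leave the happy path?
    human_touches: int    # number of manual approvals or overrides


def health_metrics(runs: list[WorkflowRun]) -> dict[str, float]:
    """Summarize cycle time, exception frequency, and intervention rate for a batch of runs."""
    if not runs:
        raise ValueError("need at least one run")
    n = len(runs)
    return {
        "median_cycle_hours": median(r.cycle_hours for r in runs),
        # Booleans sum as 0/1, so this is the share of runs with an exception.
        "exception_rate": sum(r.had_exception for r in runs) / n,
        # Share of runs that needed at least one human intervention.
        "intervention_rate": sum(r.human_touches > 0 for r in runs) / n,
    }
```

Computed per week or per workflow, these numbers become the baseline against which drift shows up. A rising intervention rate alongside a flat exception rate, for instance, may point to trust or clarity problems rather than bad data.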

Monitoring compliance and audit readiness

Audits shouldn’t be disruptive events. Continuous audit trails ensure that every AI action and human decision is automatically recorded. This is far more effective than after-the-fact documentation, which is often incomplete and inconsistent.

Real-time oversight also builds trust. Compliance teams gain confidence when they can observe workflows in real time rather than reconstruct them months later. This reduces friction between delivery teams and governance stakeholders.
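An audit trail only helps if it is complete and trustworthy. One common way to make a log tamper-evident is to chain entries by hash, so that each entry carries the SHA-256 digest of the one before it. This is a swapped-in technique shown for illustration, not a description of how Moxo stores records, and the `AuditLog` class and actor naming convention are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only, hash-chained audit trail (hypothetical sketch, not a product API).

    Each entry stores the hash of the previous entry, so editing an earlier
    record breaks the chain and is caught by verify().
    """

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, actor: str, action: str, detail: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "actor": actor,  # e.g. "ai:triage" or "human:compliance_lead"
            "action": action,
            "detail": detail,
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the hash itself, with stable key ordering.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        """Recompute every digest and check each link in the chain."""
        prev = self.GENESIS
        for e in self.entries:
            if e["prev_hash"] != prev or e["hash"] != self._digest(e):
                return False
            prev = e["hash"]
        return True
```

Because `verify()` recomputes every digest, quietly editing an earlier decision (for example, changing `detail` on the first entry) makes it return `False`.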

Identifying operational risk patterns

Isolated exceptions are manageable. Patterns are dangerous. Repeated overrides, recurring delays, or consistent policy violations indicate structural issues. Monitoring helps you spot these trends early and address root causes before risk compounds.
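Spotting a pattern can start as simply as counting. This sketch assumes a hypothetical event feed of `(step, kind)` pairs, where `kind` is something like `"override"`, `"delay"`, or `"policy_violation"`, and surfaces any combination that recurs at least `min_count` times. The default threshold of 3 is an arbitrary placeholder to tune against your own volumes.

```python
from collections import Counter


def recurring_patterns(
    events: list[tuple[str, str]], min_count: int = 3
) -> list[tuple[tuple[str, str], int]]:
    """Return (step, kind) pairs seen at least `min_count` times, most frequent first."""
    counts = Counter(events)
    # most_common() sorts by count, highest first; keep pairs at or above the threshold.
    return [(pair, n) for pair, n in counts.most_common() if n >= min_count]
```

In practice you would run this over a rolling window, say the last 30 days, so that issues already fixed drop out of the results.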

How Moxo enables real-time monitoring and auditability

Moxo offers live visibility into every workflow state, from AI-triggered actions to human approvals. This transparency removes guesswork and enables proactive management.

End-to-end traceability ensures that every decision, automated or manual, has a clear record. Role-based access allows governance teams to monitor without interfering in execution. This separation of duties is critical in regulated industries.

Most importantly, audits no longer disrupt operations. When evidence is already captured, teams spend less time preparing and more time improving.

Phase 3 – improve: Optimizing AI automation over time

Improvement is where long-term value is unlocked. AI automation should evolve alongside the business, without destabilizing operations.

Refining workflows based on real usage

Production data tells you what design workshops never will. Where do approvals slow down? Which steps add little value? By analyzing real usage, you can simplify workflows, reduce handoffs, and improve throughput.

Small refinements compound over time. Even a 10% reduction in cycle time can deliver significant ROI at scale, especially in high-volume processes.

Expanding automation safely

Scaling automation doesn’t mean blindly copying pilots. It means expanding incrementally, learning from each deployment. Production insights guide where automation can safely take on more responsibility without increasing risk.

Aligning improvements with business goals

Not every improvement is about speed. Sometimes it’s about reducing risk, lowering costs, or improving customer experience. Managed AI automation services ensure improvements stay aligned with strategic priorities, not just technical possibilities.

How Moxo supports continuous optimization

Moxo enables feedback-driven improvements by making workflow performance visible and actionable. Updates are rolled out in a controlled manner to prevent disruption.

Because changes are orchestrated centrally, regression risk is minimized. Teams can improve confidently, knowing they won’t break downstream processes. This balance of agility and control is what sustains automation at scale.

Key metrics for managed AI automation success

If you don’t measure AI automation in production, you’re guessing. Managed AI automation services succeed or fail based on operational evidence, not deployment claims. Project Managers need metrics that reflect stability, trust, and long-term value, not just task completion.

Operational uptime and SLA adherence

Uptime shows whether AI automation is dependable enough to run core business workflows. Frequent slowdowns or missed SLAs often point to orchestration gaps rather than model quality. Operational reliability is frequently what decides whether AI systems are expanded or quietly rolled back after launch.

Exception trends and resolution times

Exceptions are inevitable, but unmanaged exceptions are dangerous. Rising exception volumes or slow resolution times signal process misalignment or unclear escalation paths. Tracking trends helps you distinguish one-off anomalies from systemic design flaws before they impact outcomes.
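One way to separate a one-off anomaly from a systemic trend is to require the rise to persist. The sketch below assumes hypothetical weekly `(exceptions, total_runs)` pairs, oldest first, and flags a trend only when every recent week's exception rate exceeds the earlier baseline by a chosen ratio. The two-week window and ratio of 1.5 are illustrative defaults, not recommendations.

```python
def _rate(rows: list[tuple[int, int]]) -> float:
    """Pooled exception rate: total exceptions divided by total runs."""
    runs = sum(total for _, total in rows)
    return sum(exc for exc, _ in rows) / runs if runs else 0.0


def sustained_exception_rise(
    weekly: list[tuple[int, int]], recent_weeks: int = 2, ratio: float = 1.5
) -> bool:
    """True if every one of the last `recent_weeks` weeks exceeds ratio x the baseline rate."""
    if len(weekly) <= recent_weeks:
        raise ValueError("need more history than the recent window")
    baseline = _rate(weekly[:-recent_weeks])
    # A single spike followed by a normal week fails all(), so it is not flagged.
    return all(_rate([week]) > ratio * baseline for week in weekly[-recent_weeks:])
```

With a baseline of 5% exceptions, two consecutive weeks at 12% and 14% are flagged, while one week at 20% followed by a return to 5% is treated as an anomaly.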

Human intervention frequency and decision latency

Human-in-the-loop is necessary, but excessive intervention often signals that AI outputs lack clarity or that reviewers do not trust them. Measuring how often humans intervene and how long approvals take reveals whether AI is assisting decision-making or creating friction across teams.

Compliance, audit, and override indicators

Audit readiness is not binary. Metrics such as override frequency, undocumented decisions, and traceability gaps indicate whether governance is holding up in real conditions. Organizations with continuous audit visibility are better placed to reduce regulatory remediation effort.

Common challenges in managing AI automation post-launch

Most AI automation failures don’t happen at deployment. They happen quietly, weeks or months later, when ownership blurs and operational discipline fades. Managed AI automation services exist specifically to prevent these patterns.

Treating AI automation as “set and forget”

Once workflows go live, teams often assume the system will self-correct. It won’t. Models drift, policies change, and edge cases multiply. Without structured monitoring and improvement, automation degrades even if the underlying technology is sound.

Lack of clear operational ownership

When no one owns AI-driven outcomes post-launch, issues bounce between IT, business teams, and compliance. Unclear accountability is one of the most common reasons AI initiatives fail to scale beyond early success.

Monitoring without action

Dashboards alone don’t create value. Many teams track metrics but lack processes to act on them. Without feedback loops and controlled change mechanisms, insights remain unused and problems persist.

Automating judgment-heavy decisions too early

Rushing AI into high-risk decision-making without sufficient human controls erodes trust fast. Once stakeholders lose confidence, regaining buy-in is extremely difficult, even if the technology improves later.

Partner with Moxo to make AI automation sustainable

AI automation is an operational system that must be run, monitored, and improved continuously. As a Project Manager, you’re uniquely positioned to balance speed with control, innovation with governance.

Orchestration is what makes this balance possible. Moxo provides the backbone that connects AI, people, and processes into a single, manageable system.

When you treat automation as infrastructure rather than an experiment, managed AI automation services become the difference between short-term wins and long-term impact.

Get started with Moxo today to manage automation services efficiently.

FAQs

1. What are managed AI automation services?

Managed AI automation services focus on running, monitoring, and continuously improving AI workflows in production, ensuring reliability, governance, and sustained business outcomes beyond initial deployment.

2. Why is monitoring critical after AI automation goes live?

Without monitoring, AI workflows degrade due to model drift, exceptions, and policy changes. Continuous oversight helps detect risks early and maintain performance, compliance, and stakeholder trust.

3. How do managed AI automation services reduce operational risk?

They provide structured orchestration, human-in-the-loop controls, audit trails, and exception handling, ensuring AI decisions remain transparent, accountable, and aligned with regulatory requirements.

4. What role do Project Managers play in managed AI automation?

Project Managers coordinate stakeholders, track operational metrics, manage exceptions, and ensure AI automation evolves safely without disrupting ongoing business operations.

5. How does Moxo support managed AI automation services?

Moxo acts as an operational control layer, orchestrating AI tools, systems, and human actions while providing real-time visibility, auditability, and controlled optimization across workflows.