The core steps to build an AI onboarding process are: define what success looks like, map your actual process (not the fantasy version), instrument the journey for data, build smart intake forms, add AI-assisted guidance, deploy an onboarding assistant, automate workflow routing, and close the loop with measurement. Skip any of these and you'll have AI that's technically impressive but operationally useless.

Here's why this matters now. Only 12% of employees strongly agree their organization does a great job onboarding, according to Gallup. Meanwhile, Brandon Hall Group research found that organizations with strong onboarding improve new-hire retention by 82% and productivity by over 70%.

The gap between those numbers is where most companies are bleeding time, money, and people. AI can close that gap, but only if you build it systematically using AI onboarding best practices.

Key takeaways

AI can't optimize what you haven't defined. Start with a single success metric before you touch any technology.

Map reality, not aspiration. Process mining exists because the process in your head is never the process that actually runs.

Human-in-the-loop isn't optional. Fully autonomous onboarding makes a great demo. Auditable onboarding survives procurement.

Step 1: Define onboarding success in one sentence

AI can't "optimize onboarding" if you don't tell it what winning looks like. Before you evaluate any tool, write down one primary outcome: time-to-value, activation rate, time-to-productivity, or whatever metric actually matters for your business. Then add two or three secondary metrics that support it.

This sounds obvious, but most teams skip it. They buy AI tools and then wonder why the results are underwhelming. The tool wasn't the problem. The lack of a target was.
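One way to force the decision is to write the target down as data before any tool evaluation starts. The sketch below is only an illustration: the class names, metric names, and target values are all hypothetical, and your own primary metric may look different.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    name: str
    target: float
    unit: str
    higher_is_better: bool = True

    def is_met(self, observed: float) -> bool:
        """Return True when the observed value reaches the target."""
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


@dataclass(frozen=True)
class SuccessDefinition:
    primary: Metric
    secondary: tuple[Metric, ...] = ()

    def __post_init__(self) -> None:
        # Two or three supporting metrics is the suggestion; reject more than three.
        if len(self.secondary) > 3:
            raise ValueError("keep secondary metrics to three or fewer")


# Hypothetical targets, for illustration only.
success = SuccessDefinition(
    primary=Metric("time_to_value", target=7, unit="days", higher_is_better=False),
    secondary=(
        Metric("activation_rate", target=0.60, unit="ratio"),
        Metric("intake_completion_rate", target=0.85, unit="ratio"),
    ),
)
```

With something like this in place, `success.primary.is_met(5)` answers the only question that matters in a review: did we hit the target or not.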

Step 2: Map the actual process (not the fantasy)

The process in your documentation is not the process that actually runs. Process mining exists specifically to discover and improve real processes using event logs, not assumed flows. Before you automate anything, especially complex employee onboarding AI workflows, map what actually happens at each stage: intake, verification, setup, training, first value, and handoff. A process-mapping guide can help if you need a starting point.

You'll probably find that your "onboarding process" is actually three different processes depending on who's doing it, with handoffs that exist only in someone's memory.
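To see how an event log exposes those hidden variants, here is a minimal sketch of the simplest process-mining idea: group events by case, order them by time, and count each distinct path. The log format, case IDs, and activity names are made up for illustration. Dedicated process-mining tools go much further than this.

```python
from collections import Counter, defaultdict

# Hypothetical event log: (case_id, activity, ISO timestamp)
event_log = [
    ("c1", "intake", "2024-01-02T09:00"),
    ("c1", "verification", "2024-01-02T10:00"),
    ("c1", "setup", "2024-01-03T09:00"),
    ("c2", "intake", "2024-01-02T11:00"),
    ("c2", "setup", "2024-01-02T12:00"),
    ("c2", "verification", "2024-01-04T08:00"),
    ("c3", "intake", "2024-01-05T09:00"),
    ("c3", "verification", "2024-01-05T09:30"),
    ("c3", "setup", "2024-01-06T10:00"),
]


def discover_variants(log):
    """Group events by case, order by time, and count each distinct path."""
    traces = defaultdict(list)
    for case_id, activity, timestamp in log:
        traces[case_id].append((timestamp, activity))
    variants = Counter()
    for events in traces.values():
        # ISO timestamps in the same format sort correctly as strings.
        path = tuple(activity for _, activity in sorted(events))
        variants[path] += 1
    return variants


variants = discover_variants(event_log)
for path, count in variants.most_common():
    print(count, " -> ".join(path))
```

In this toy log, two cases follow the documented order and one runs setup before verification. That third case is the kind of undocumented path worth asking about before you automate.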

Step 3: Instrument the journey

You need two types of data: event data (what happened) and reason data (why it happened). Build an event tracking plan that captures every step. Identify drop-off points. Collect reason codes from surveys, chat tags, and call notes.
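As a rough sketch of how the two data types work together, the example below counts how many users reached each step, finds the step with the largest loss, and then looks up the reason codes for the users who stopped there. Step names, user IDs, and reason codes are all hypothetical.

```python
from collections import Counter

STEPS = ["intake", "verification", "setup", "training", "first_value"]

# Hypothetical event data: the furthest step each user reached.
furthest_step = {
    "u1": "first_value",
    "u2": "verification",
    "u3": "verification",
    "u4": "intake",
    "u5": "setup",
    "u6": "first_value",
}

# Hypothetical reason data from surveys, chat tags, or call notes.
reason_codes = {
    "u2": "missing_document",
    "u3": "missing_document",
    "u4": "form_too_long",
}


def funnel(furthest, steps):
    """Count how many users reached each step, in order."""
    index = {step: i for i, step in enumerate(steps)}
    reached = [0] * len(steps)
    for step in furthest.values():
        for i in range(index[step] + 1):
            reached[i] += 1
    return dict(zip(steps, reached))


def biggest_drop(reached, steps):
    """Return the step after which the most users were lost."""
    losses = {
        steps[i]: reached[steps[i]] - reached[steps[i + 1]]
        for i in range(len(steps) - 1)
    }
    return max(losses, key=losses.get)


reached = funnel(furthest_step, STEPS)
worst = biggest_drop(reached, STEPS)
reasons = Counter(
    reason_codes[user]
    for user, step in furthest_step.items()
    if step == worst and user in reason_codes
)
```

Here the event data says most people are lost after verification, and the reason data says why: both of those users were missing a document. Neither data type tells that story alone.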

Track leading indicators like activation, completion rate, and time-to-value rather than waiting months for retention data. By the time retention tells you something's wrong, you've already lost the people you could have saved.
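Leading indicators are cheap to compute once timestamps exist. The sketch below derives an activation rate and a median time-to-value from a handful of records. The record shape and field names are assumptions, and "first value" is whatever event you defined in step one.

```python
from datetime import datetime
from statistics import median

# Hypothetical records: signup time and first-value time (None if not reached yet).
records = [
    {"user": "u1", "signed_up": "2024-03-01T09:00", "first_value": "2024-03-03T09:00"},
    {"user": "u2", "signed_up": "2024-03-01T10:00", "first_value": None},
    {"user": "u3", "signed_up": "2024-03-02T08:00", "first_value": "2024-03-06T08:00"},
    {"user": "u4", "signed_up": "2024-03-02T12:00", "first_value": "2024-03-03T12:00"},
]


def days_between(start, end):
    """Elapsed time between two ISO timestamps, in days."""
    delta = datetime.fromisoformat(end) - datetime.fromisoformat(start)
    return delta.total_seconds() / 86400


activated = [r for r in records if r["first_value"] is not None]
activation_rate = len(activated) / len(records)
median_time_to_value_days = median(
    days_between(r["signed_up"], r["first_value"]) for r in activated
)
```

Both numbers are available within days of signup, which is the point: you can act on them while the people behind them are still in the process.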

Step 4: Build smart intake

Intake is where most onboarding dies. Visa's research on digital onboarding found that average abandonment happens at 14 minutes and 20 seconds, and at 20 minutes, 70% of users abandon entirely. Your intake form is competing against that clock.

AI helps here through entity extraction, autofill, document classification, and "next best question" logic that asks fewer questions by reusing known data. The goal is adaptive intake that validates as you go, not a static form that collects everything upfront and hopes for the best.
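The "ask fewer questions" part does not need a model to illustrate. Below is a deliberately simple, rule-based stand-in for next-best-question logic: it validates each answer as it arrives and only asks for fields that are missing or invalid. The schema, field names, and validation patterns are hypothetical, and in a real system the known values might come from your CRM or from entity extraction on an uploaded document.

```python
import re

# Hypothetical intake schema: field name -> (question, validation pattern).
FIELDS = {
    "company_name": ("What is your company's legal name?", r".+"),
    "contact_email": ("What is the best contact email?", r"[^@\s]+@[^@\s]+\.[^@\s]+"),
    "tax_id": ("What is your tax ID?", r"\d{2}-\d{7}"),
}


def is_valid(field, value):
    """Validate a single answer as soon as it is provided."""
    _, pattern = FIELDS[field]
    return value is not None and re.fullmatch(pattern, value) is not None


def next_question(known):
    """Return the first field that is missing or invalid, or None when done."""
    for field, (question, _) in FIELDS.items():
        if not is_valid(field, known.get(field)):
            return field, question
    return None


# Data already known before the form is shown.
known = {"company_name": "Acme Ltd", "contact_email": "ops@acme.example"}
```

With two of three fields already known, `next_question(known)` skips straight to the tax ID. Every question you don't ask is time back on that abandonment clock.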

Step 5: Add AI-assisted guidance

Once someone is in your product or process, they need to reach first value fast. AI-assisted in-app guidance creates contextual checklists and walkthroughs that change based on persona, role, stage, and behavior.
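Stripped of the AI layer, a contextual checklist is a filter over a task catalog. The sketch below shows only that selection logic, using role, stage, and completed tasks as inputs. The catalog, roles, and stage names are invented for illustration, and a model could sit on top of this to generate or rank the tasks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    key: str
    label: str
    roles: frozenset  # roles that should see this task
    stage: str        # onboarding stage where the task applies


# Hypothetical task catalog.
CATALOG = [
    Task("profile", "Complete your profile", frozenset({"admin", "member"}), "setup"),
    Task("sso", "Configure single sign-on", frozenset({"admin"}), "setup"),
    Task("invite", "Invite your team", frozenset({"admin"}), "setup"),
    Task("first_project", "Create your first project", frozenset({"admin", "member"}), "first_value"),
]


def checklist(role, stage, completed):
    """Return tasks for this role and stage that the user has not done yet."""
    return [
        task.label
        for task in CATALOG
        if role in task.roles and task.stage == stage and task.key not in completed
    ]
```

A member in setup sees one task. An admin who has already completed their profile sees two different ones. Same product, different path to first value.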

This isn't theoretical. Pendo documents AI-assisted guide creation that lets teams build guides using conversational AI. The technology exists. The question is whether you've done steps one through four well enough to know what guidance to build.

Step 6: Deploy an onboarding assistant

An onboarding assistant answers "how do I...?" questions, retrieves policy and documentation, and escalates to humans with context. The key is restricted scope: start with onboarding only, a tight knowledge base, and explicit boundaries on what it can and can't do.

NIST's AI Risk Management Framework positions risk management as core to responsible AI use. For onboarding assistants, that means clear guardrails, human escalation paths, and audit trails from day one.
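Those three guardrails fit in a few lines. The sketch below is a toy, not a production assistant: plain keyword matching stands in for real retrieval, and the knowledge base entries and function name are hypothetical. What it does show is the shape: answer only from a tight knowledge base, escalate everything else with context, and log every interaction.

```python
from datetime import datetime, timezone

# Hypothetical, deliberately small knowledge base scoped to onboarding.
KNOWLEDGE_BASE = {
    "reset password": "Use the 'Forgot password' link on the sign-in page.",
    "upload documents": "Open your onboarding checklist and select 'Upload'.",
}

audit_log = []


def answer(question, user_id):
    """Answer from the knowledge base, or escalate to a human with context."""
    normalized = question.lower()
    # Keyword matching is a stand-in for a real retrieval step.
    match = next((key for key in KNOWLEDGE_BASE if key in normalized), None)
    if match is not None:
        result = {"action": "answer", "text": KNOWLEDGE_BASE[match]}
    else:
        # Out of scope: hand off rather than guess.
        result = {
            "action": "escalate",
            "context": {"user_id": user_id, "question": question},
        }
    audit_log.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "question": question,
        "action": result["action"],
    })
    return result
```

Asking "How do I upload documents?" gets an answer. Asking about a refund gets escalated with the original question attached, so the human who picks it up doesn't start from zero.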

Step 7: Automate the workflow

This is where most teams want to start, but it's step seven for a reason. Workflow automation means orchestrating across systems (CRM, HRIS, ticketing, storage, e-sign, identity verification) with routing, approvals, and audit trails. Without the earlier steps, you're just automating chaos faster.
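To make routing, approvals, and audit trails concrete, here is a generic sketch that is not tied to any particular platform. The required documents, risk levels, and step names are assumptions for illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class OnboardingCase:
    case_id: str
    documents: set
    risk: str  # "low" or "high"
    audit_trail: list = field(default_factory=list)


REQUIRED_DOCUMENTS = {"id_proof", "signed_contract"}  # hypothetical requirement


def route(case):
    """Decide the next step for a case and record the decision."""
    missing = REQUIRED_DOCUMENTS - case.documents
    if missing:
        step = ("request_documents", sorted(missing))
    elif case.risk == "high":
        # High-risk cases always go to a person for approval.
        step = ("human_approval", ["compliance"])
    else:
        step = ("provision_accounts", [])
    case.audit_trail.append(step)
    return step
```

Notice what the routing function depends on: a definition of "complete" and a definition of "high risk". If the earlier steps didn't produce those, there is nothing here to automate.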

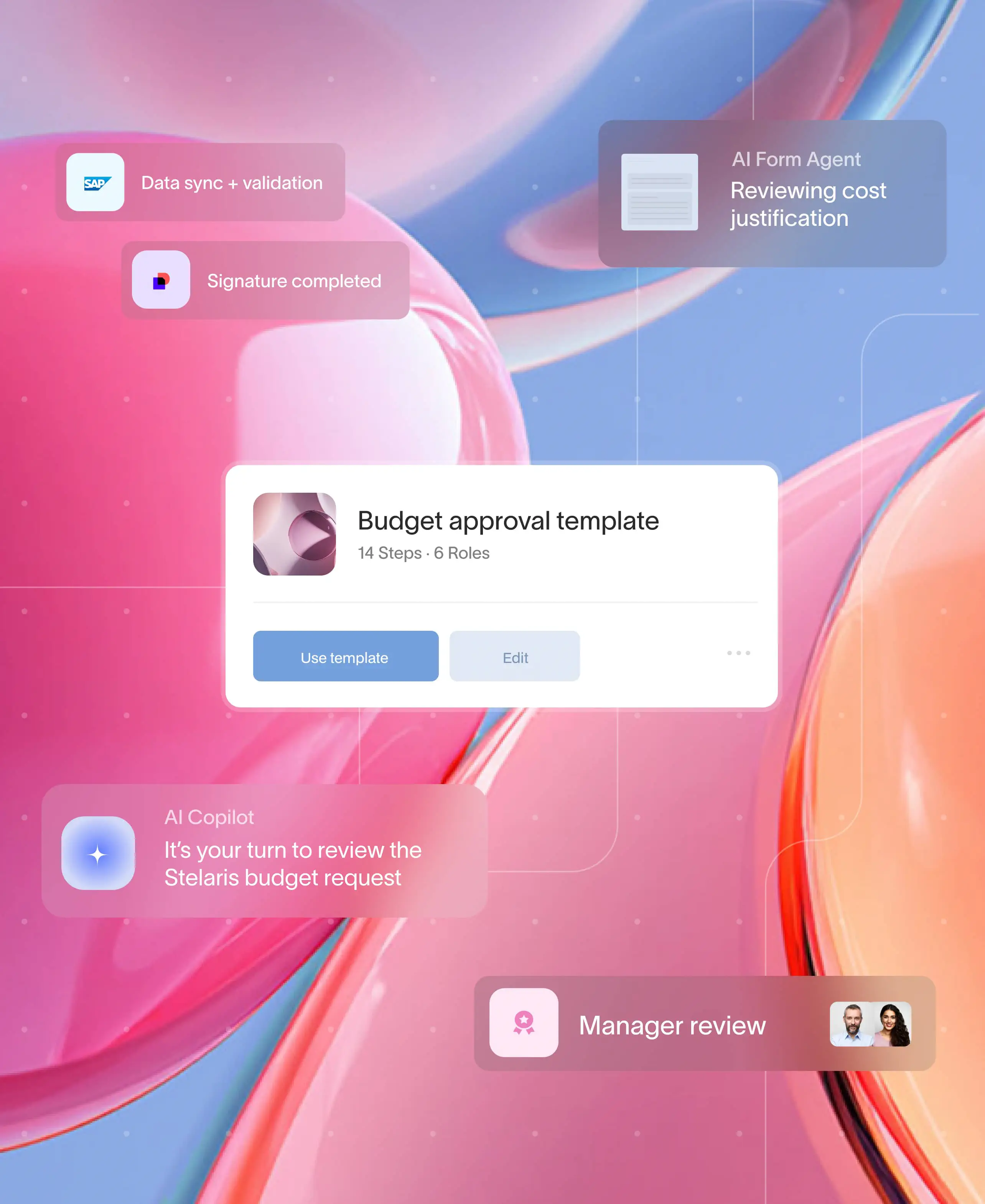

Moxo is a Human + AI Process Orchestration Platform built for exactly this. It models your onboarding workflows around the humans who need to make decisions (approvals, exceptions, compliance) and embeds AI agents to handle everything else: validating submissions, routing tasks, sending reminders, and preparing context.

The Moxo AI Review Agent checks documents for completeness and automatically requests what's missing. The workflow routes to the right people at the right time, regardless of where they sit. Every step is logged. What used to require constant manual coordination now runs itself, with 50-70% fewer SLA misses and up to 80% fewer follow-up emails.

Step 8: Close the loop

Treat onboarding like a product: ship, measure, fix, repeat. Run a weekly review covering bottlenecks, abandonment rates, and the other metrics you use to measure AI onboarding success (a dedicated metrics guide covers the full list). The data you instrumented in step three feeds this loop.
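A weekly review can start as a simple comparison. The sketch below computes per-step abandonment for two weeks and flags any step that got meaningfully worse. The snapshot numbers and the two-percentage-point threshold are made up for illustration.

```python
# Hypothetical weekly snapshots: (users who started, users who completed) per step.
last_week = {"intake": (200, 150), "verification": (150, 135), "setup": (135, 128)}
this_week = {"intake": (220, 176), "verification": (176, 141), "setup": (141, 135)}


def abandonment(snapshot):
    """Abandonment rate per step: share of starters who did not finish."""
    return {step: 1 - done / started for step, (started, done) in snapshot.items()}


def regressions(previous, current, threshold=0.02):
    """Steps whose abandonment rate rose by more than the threshold."""
    before, after = abandonment(previous), abandonment(current)
    return {
        step: round(after[step] - before[step], 3)
        for step in after
        if after[step] - before[step] > threshold
    }


flagged = regressions(last_week, this_week)
```

In this example intake improved, setup held steady, and verification abandonment roughly doubled. That one flagged step is your agenda for the week.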

ABBYY's onboarding research found that 90% of organizations experience onboarding abandonment, with leaders estimating that cutting abandonment by 50% could raise acquisition by 29% and revenue by 26%. The opportunity is real. But you only capture it if you measure relentlessly and iterate continuously.

Conclusion

Building an AI onboarding process isn't about buying the right tool. It's about doing the foundational work that makes any tool effective: defining success, mapping reality, instrumenting for data, and building human oversight into the system from the start.

Most teams want to jump to automation. The ones who succeed are the ones who do the boring work first. See how Moxo approaches process orchestration for onboarding in a product walkthrough.

FAQs

Can I use these steps for customer onboarding and employee onboarding?

Yes. The framework applies to customer, employee, and vendor onboarding. The specific metrics and steps change, but the structure (define success, map reality, instrument, build intake, guide users, assist, automate, measure) works across all three.

What if I don't have event data to work with?

Start collecting it now. Even basic tracking (timestamps on key steps, completion rates, drop-off points) gives you enough to identify bottlenecks. You don't need perfect data to start. You need directionally useful data.

How do I handle compliance and audit requirements?

Build human-in-the-loop review points into your workflow for compliance checks, exceptions, and high-risk decisions. Choose tools that provide audit trails by default. "Fully autonomous" sounds impressive, but "auditable" is what survives procurement and regulatory scrutiny.

What's the biggest mistake teams make when building AI onboarding?

Jumping to automation before doing the foundational work. If you automate a broken process, you just break it faster. Define success, map what actually happens, and instrument for measurement before you touch workflow automation.