Your AI just flagged a client document as incomplete. Now what?

If you're like most operations teams, the answer involves a scramble: someone screenshots the flag, pastes it into Slack, tags three people who might know what to do, and hopes the client doesn't follow up before it's resolved. Meanwhile, there's no record of who handled it, what they decided, or why.

This is the operational gap that most AI conversations ignore. Everyone talks about training models and improving algorithms. Few talk about what happens when AI flags something and a human needs to step in, and most organizations have no structured way to manage the human review tasks that human-in-the-loop (HITL) AI creates.

For operations and service teams managing client onboarding, document validation, or compliance reviews, this guide covers what human-in-the-loop AI actually means for your workflows, and how to build review systems that scale without creating bottlenecks.

Key takeaways

Human-in-the-loop AI is about operational coordination, not data science. When AI flags exceptions in business processes, operations teams need structured workflows to route those flags, capture decisions, and keep clients informed.

Client-facing AI review is an underserved opportunity. Most HITL content focuses on internal ML training. The real challenge for service businesses is involving clients and external stakeholders in the review process without creating chaos.

Audit trails transform one-off fixes into compliance assets. Every human decision on an AI-flagged item must be logged, justified, and accessible for audits, disputes, or process improvement.

Workflow orchestration makes HITL scalable. Without structured task assignment and collaboration tools, human review becomes the bottleneck that defeats the purpose of automation.

What is human-in-the-loop AI?

Human-in-the-loop AI describes any system where humans and AI collaborate rather than AI operating autonomously. The AI produces outputs, humans validate or correct those outputs, and those corrections strengthen future AI behavior. It's the difference between letting AI run unchecked and building a feedback loop where human judgment continuously improves the system.

In a fully automated pipeline, AI makes decisions end-to-end without intervention. A document gets scanned, data gets extracted, and the output goes straight into your system, whether it's accurate or not.

In a HITL workflow, automation handles the predictable work while humans focus on the exceptions that require context, nuance, or regulatory judgment. The human isn't slowing things down; they're catching the errors that would create bigger problems downstream.

Training AI with human feedback is what makes HITL more than a safety net. When a human reviewer flags that the AI misread "2025" as "2035" on a contract, that correction can feed back into the system.

Over time, fewer items need human review because the AI has learned from thousands of human decisions and handles more edge cases on its own. This is fundamentally different from static automation, which makes the same mistakes forever.
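As a rough illustration of how corrections like the "2035" example can be captured for later retraining, here is a minimal Python sketch. The `Correction` schema, the `CorrectionStore` class, and the field names are hypothetical and not part of any particular product; a real system would persist these rows and pass them to whatever retraining process the team uses.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Correction:
    # Hypothetical schema: one human decision on one AI-extracted field.
    document_id: str
    field_name: str
    ai_value: str
    human_value: str
    reviewer: str
    reviewed_at: datetime


@dataclass
class CorrectionStore:
    corrections: list = field(default_factory=list)

    def record(self, document_id, field_name, ai_value, human_value, reviewer):
        """Log a reviewer's final value for a field, whether or not it changed."""
        c = Correction(document_id, field_name, ai_value, human_value,
                       reviewer, datetime.now(timezone.utc))
        self.corrections.append(c)
        return c

    def training_examples(self):
        """Export (field, ai_value, human_value) rows where the human overrode the AI.

        These rows are what a later (hypothetical) retraining job would consume.
        """
        return [(c.field_name, c.ai_value, c.human_value)
                for c in self.corrections if c.ai_value != c.human_value]
```

Confirmations are logged too, because an unchanged value is still a human-verified label; only the overrides are exported here as correction examples.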

Human-in-the-loop AI vs. autonomous AI: Why your operations team needs both

You'll encounter several terms that overlap with human-in-the-loop AI. Understanding the distinctions helps you choose the right approach for your workflows.

Reinforcement learning from human feedback (RLHF) is one of the techniques used to fine-tune ChatGPT and other large language models. Human raters compare or rank AI outputs, those preferences train a reward model, and the reward model is then used to steer the language model toward better responses. RLHF is a specific technique for model training. HITL is broader, encompassing any workflow where humans review, correct, or validate AI outputs, whether or not those corrections formally retrain a model.

Active learning is a machine learning strategy where the AI identifies which data points would be most valuable for a human to label. Instead of reviewing everything, humans focus on the examples where the model is least confident. Active learning is a subset of HITL, optimized specifically for efficient model training.
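One common way to pick "the examples where the model is least confident" is entropy-based uncertainty sampling; margin sampling and committee-based methods are alternatives. The sketch below assumes each prediction is available as a list of class probabilities keyed by a hypothetical item id.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of one prediction's class probabilities."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions, k):
    """Uncertainty sampling: return the ids of the k most uncertain predictions.

    `predictions` maps an item id to its list of class probabilities;
    higher entropy means the model is less sure which class is correct.
    """
    ranked = sorted(predictions,
                    key=lambda item_id: entropy(predictions[item_id]),
                    reverse=True)
    return ranked[:k]
```

For example, given `{"doc-1": [0.98, 0.02], "doc-2": [0.55, 0.45], "doc-3": [0.70, 0.30]}` and `k=2`, the near coin-flip `doc-2` and the less certain `doc-3` go to human labelers, while `doc-1` does not.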

Supervised learning uses pre-labeled datasets to train models before deployment. HITL extends this by continuing human involvement after deployment, catching errors in production and enabling continuous improvement.

For operations teams, the technical distinctions matter less than the practical question: how do we manage the human review tasks that AI-assisted processes create?

When AI extracts data from a client-submitted form, it will occasionally misread handwriting or flag missing fields. When AI scans transactions for compliance issues, it generates alerts that require human judgment. When AI pre-fills onboarding documents, errors create client-facing delays. In each case, someone needs to review, decide, and document what happened. That's HITL in practice, and it's fundamentally an operational challenge, not a data science one.

For teams already working on business process automation, HITL represents the practical layer that makes AI-assisted workflows reliable.

The role of HITL in ML model training and validation

Before we get to the operational workflows that matter for service teams, it's worth understanding where HITL comes from. The concept originated in machine learning, where human involvement solves a fundamental problem: AI is only as good as the data it learns from.

Model training depends on high-quality human input. Before an AI can recognize a fraudulent transaction or extract data from a form, humans must label thousands of examples. These annotators identify what "good" looks like: this signature is valid, this field contains a date, this transaction is suspicious. The quality of those labels directly determines the model's accuracy. Garbage labels produce garbage predictions.

Edge cases require human judgment. AI models struggle with ambiguity. A handwritten "7" that looks like a "1." A compliance flag that could be a legitimate business activity or money laundering. A document in an unexpected format. Human annotators provide the nuanced decisions that help models handle these gray areas instead of failing silently.

Validation catches drift before it causes damage. After deployment, AI models encounter real-world data that differs from their training sets. Performance degrades over time as the gap widens. Human reviewers monitoring low-confidence outputs and ambiguous cases catch this drift early, flagging when the model needs recalibration.
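A simple operational proxy for drift is how often reviewers override the AI. The sketch below is a hypothetical heuristic, not a standard drift test: it compares the override rate over a rolling window of reviewed items against a baseline plus a tolerance, and the default values are placeholders a team would tune.

```python
from collections import deque


class OverrideRateMonitor:
    """Flag possible drift when reviewers override the AI more often than a baseline.

    Hypothetical heuristic: compare the override rate over the most recent
    `window` reviewed items against `baseline_rate + tolerance`.
    """

    def __init__(self, baseline_rate, tolerance=0.05, window=200):
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance
        self.recent = deque(maxlen=window)  # True = human changed the AI output

    def observe(self, overridden):
        self.recent.append(bool(overridden))

    def override_rate(self):
        return sum(self.recent) / len(self.recent) if self.recent else 0.0

    def drift_suspected(self):
        # Only judge once the window is full, so a few early overrides don't trigger an alert.
        if len(self.recent) < self.recent.maxlen:
            return False
        return self.override_rate() > self.baseline_rate + self.tolerance
```

A flag from a monitor like this is a prompt for a person to look at recent cases and decide whether the model needs recalibration, not an automatic retraining trigger.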

HITL AI software traditionally focuses on this training pipeline. Platforms like Labelbox, Scale AI, and Amazon SageMaker Ground Truth provide annotation interfaces, review queues, and workflow tools for data science teams.

They're optimized for labeling efficiency: how many images can annotators classify per hour? How do you manage disagreements between reviewers? How do you route the highest-value items to your best annotators?

This is valuable work. But it's not what operations teams need.

Why HITL matters for operations and service teams

Most human-in-the-loop AI content targets data scientists and ML engineers. It focuses on annotation tools, model training, and algorithm improvement. That's the domain of platforms like Labelbox and Scale AI.

But operations and service teams face a different problem entirely. They're not training models. They're managing the human review tasks that AI-assisted processes create, often involving clients and external stakeholders who expect fast, transparent resolution.

Document review workflows illustrate the gap. When AI validates a client-submitted ID and flags it as potentially expired, someone needs to check it, make a call, and communicate back to the client. Without a structured process, that flag becomes a sticky note, a Slack message, or an email that gets buried. The client waits. The deal stalls.

Compliance checks create audit exposure. AI can scan transactions for potential fraud or regulatory violations, but a human determines whether a flag is a genuine concern or a false positive. That decision needs to be captured with the reviewer's identity, their reasoning, and a timestamp. When auditors ask questions months later, "we handled it" isn't an acceptable answer.

Client onboarding reveals the stakes. When AI pre-fills applications or routes documents, errors delay the entire relationship. Peninsula Visa reduced document processing time by 93% after implementing structured flows that guide clients step-by-step and route exceptions to staff automatically. The AI handles volume; the workflow handles coordination.

The pattern is consistent: AI surfaces exceptions, humans resolve them, and the orchestration layer ensures nothing falls through the cracks while keeping external stakeholders in the loop.

Best practices for HITL review and correction workflows

Building effective human review processes requires more than adding approval steps. Teams need structured approaches that optimize human effort, maintain quality, and keep clients informed.

Confidence-based routing ensures reviewers see only what needs attention. AI systems assign confidence scores to their outputs. Low-confidence items get routed to humans; high-confidence outputs proceed automatically. This prevents reviewer fatigue and keeps throughput high. Without smart routing, teams either review everything (unsustainable) or review nothing (risky).
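A minimal sketch of confidence-based routing, assuming each AI output carries a score between 0 and 1. The `Extraction` type and the 0.90 default threshold are illustrative assumptions, not values from any specific tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Extraction:
    item_id: str
    value: str
    confidence: float  # model-reported score in [0, 1]


def route(extractions, threshold=0.90):
    """Split AI outputs into auto-accepted items and a human review queue.

    `threshold` is a hypothetical starting point; teams would calibrate it
    against observed error rates.
    """
    auto, review = [], []
    for e in extractions:
        (auto if e.confidence >= threshold else review).append(e)
    # Lowest confidence first, so reviewers see the riskiest items at the top.
    review.sort(key=lambda e: e.confidence)
    return auto, review
```

Raising the threshold sends more items to people (safer, slower); lowering it does the opposite, which is why the threshold is a parameter rather than a constant.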

Context preservation enables accurate decisions. When a reviewer receives a flagged item, they need the full picture: the original input, the AI's interpretation, any related documents, prior decisions on similar cases, and client communication history. Moxo's workflow orchestration provides this rich context through collaborative workspaces, ensuring reviewers never make decisions in a vacuum.

Client visibility reduces friction. When AI flags an issue with a client submission, the client shouldn't wonder what happened. Structured workflows can notify clients automatically, request additional information through the same channel, and keep everyone aligned. This transforms HITL from an internal process into a client-facing capability.

Audit trails create accountability. Every correction, approval, or escalation must be logged with timestamps and reviewer identification. This documentation serves compliance requirements, supports dispute resolution, and reveals patterns that inform process improvement.
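As a sketch of what "logged, justified, and accessible" can mean in practice, the snippet below appends each decision to a JSON Lines file and refuses entries without a reason. The file format, the action names, and both functions are hypothetical; a production audit trail would typically live in a database with access controls and retention rules.

```python
import json
from datetime import datetime, timezone

ALLOWED_ACTIONS = {"approve", "correct", "reject", "escalate"}


def log_decision(path, item_id, reviewer, action, reason):
    """Append one review decision to a JSON Lines audit file.

    Hypothetical format: one JSON object per line, written in append mode so
    existing entries are never rewritten.
    """
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"unknown action: {action!r}")
    if not reason.strip():
        raise ValueError("a justification is required for every decision")
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "item_id": item_id,
        "reviewer": reviewer,
        "action": action,
        "reason": reason,
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(entry) + "\n")
    return entry


def history(path, item_id):
    """Return every logged decision for one item, oldest first."""
    with open(path, encoding="utf-8") as f:
        return [e for e in map(json.loads, f) if e["item_id"] == item_id]
```

Calling `history(path, "doc-9")` then answers the auditor's questions directly: who acted on the item, when, what they did, and why.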

Falconi Consulting reduced turnaround times by 40% after implementing automated workflows with role-based task routing, demonstrating how structured HITL processes accelerate rather than slow down operations.

Common challenges in human-in-the-loop AI workflows

Even well-designed HITL processes face predictable obstacles. Recognizing these challenges early helps teams build systems that scale.

Scalability concerns arise when exception volumes spike. Human review becomes a bottleneck if routing logic sends too many items for manual handling or if reviewer capacity doesn't match demand. The solution is continuous calibration: adjusting confidence thresholds, refining routing rules, and distributing work across qualified team members. With Moxo, teams can automate task assignment based on roles, priorities, and expertise, preventing any single reviewer from becoming overwhelmed.
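Role- and load-aware assignment can be sketched in a few lines. This is a generic illustration, not Moxo's API: the `Reviewer` type, the role names, and the per-reviewer capacity cap are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Reviewer:
    name: str
    roles: set
    open_tasks: int = 0
    max_tasks: int = 10  # hypothetical capacity cap per reviewer


def assign(task_role, reviewers):
    """Give a task to the least-loaded reviewer who holds the required role.

    Returns the chosen Reviewer, or None if everyone qualified is at capacity,
    which is the signal to escalate or revisit routing thresholds.
    """
    qualified = [r for r in reviewers
                 if task_role in r.roles and r.open_tasks < r.max_tasks]
    if not qualified:
        return None
    chosen = min(qualified, key=lambda r: r.open_tasks)
    chosen.open_tasks += 1
    return chosen
```

Returning `None` instead of overloading someone makes a capacity shortfall visible, which is exactly when the calibration described above is needed.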

Consistency across reviewers requires clear guidelines. When multiple people handle similar cases, their decisions should align. Without documented standards and regular calibration, different reviewers may reach different conclusions on identical inputs, creating compliance risk and client confusion.
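One standard way to check calibration is to have two reviewers decide the same sample of cases and compute Cohen's kappa, κ = (p_o − p_e) / (1 − p_e), where p_o is observed agreement and p_e is the agreement expected by chance. The sketch below is a plain-Python implementation; the labels used with it are illustrative.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Agreement between two reviewers on the same items, corrected for chance.

    1.0 = perfect agreement, 0.0 = no better than chance, negative = worse.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        # Both reviewers used one identical label throughout; kappa is
        # undefined here, so treat it as full agreement by convention.
        return 1.0
    return (observed - expected) / (1 - expected)
```

For instance, two reviewers who agree on 4 of 6 approve/reject calls, each approving half the time, score about 0.33: they agree more often than chance, but far from consistently enough for disputed cases.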

Client communication fragments without structure. When AI flags an issue with a client submission, the response often scatters across email, chat, and internal notes. Clients receive inconsistent updates. Staff duplicate effort. A unified workflow keeps client communication in one place, connected to the review task and the audit trail.

Integration complexity slows adoption. HITL workflows require smooth data flow between AI systems, review tools, and client-facing channels. Disconnected tools mean manual handoffs and lost context. Platforms with native third-party integrations reduce this friction.

Regulatory and compliance aspects

Human-in-the-loop workflows aren't just operationally efficient; in many industries they are how organizations meet compliance requirements. Regulatory frameworks across financial services, healthcare, and government contracting increasingly demand documented human oversight of automated decisions, especially when those decisions affect clients or sensitive data.

Audit trails are the foundation of compliance. When an AI system flags an issue, the response becomes a compliance artifact. Regulators want to see not just the AI's decision but the human review process: who reviewed it, when they reviewed it, what they decided, and why. This documentation protects organizations during audits, disputes, and investigations. Without structured logging, teams create compliance risk by handling reviews through ad hoc channels like email or chat.

Explainability requirements drive the need for HITL processes. Regulations such as the GDPR, which gives individuals rights around decisions based solely on automated processing, and U.S. fair lending rules, which require lenders to give specific reasons when they deny credit, push organizations to explain automated decisions to affected parties. HITL workflows create the documentation needed for these explanations. When a customer asks why their application was flagged or rejected, teams can point to a structured review record that shows both AI reasoning and human judgment.

Standardization across reviewers prevents regulatory exposure. When multiple people handle similar decisions, inconsistency becomes a liability. Compliance officers worry about disparate treatment claims when decisions vary based on the reviewer. Structured workflows with clear guidelines, training, and regular calibration sessions ensure consistent decision-making and reduce legal risk.

Client trust grows when processes are transparent. Organizations that can explain their HITL workflows to clients, showing how they combine automation with human expertise, build credibility. This is especially important in industries like underwriting, vendor management, and professional services, where clients want assurance that important decisions receive human attention.

How Moxo supports human review in AI-assisted workflows

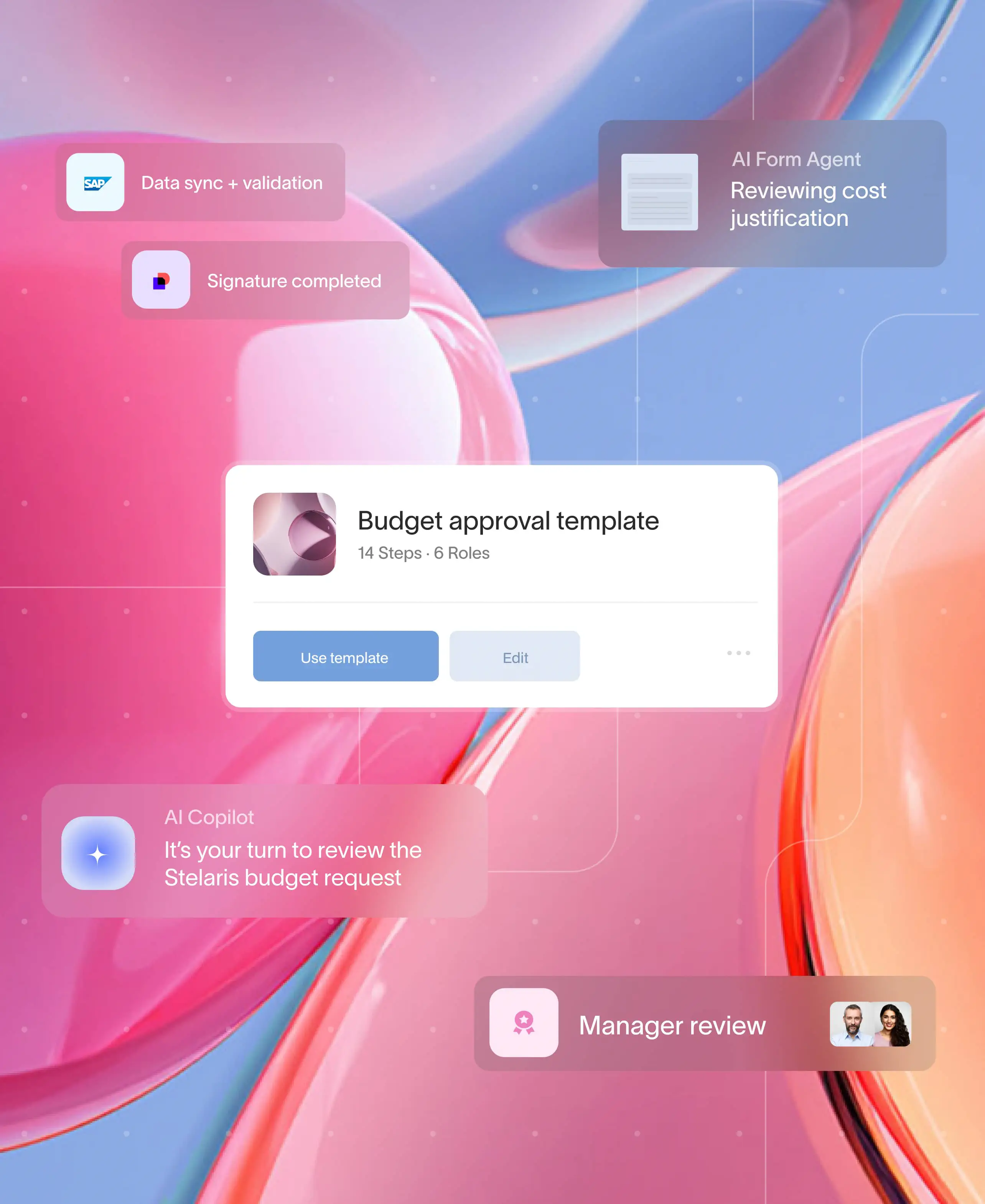

Moxo provides workflow orchestration that operationalizes human review tasks within AI-assisted business processes. Rather than focusing on ML model training, Moxo addresses what operations teams actually need: getting the right tasks to the right people with the right context, while keeping clients informed.

Task routing based on roles and expertise. When AI flags a low-confidence item, whether a document extraction issue, a form validation failure, or a compliance alert, Moxo routes that task to the appropriate reviewer automatically. No manual assignment, no guessing who should handle it.

Full context at every decision point. Reviewers see the complete history: AI outputs, related data, prior actions, client communications, and any notes from previous handlers. This collaboration and client management capability ensures decisions are informed rather than improvised.

Client-facing coordination built in. Unlike internal-only review tools, Moxo keeps clients in the loop. When a document needs correction or additional information is required, clients receive notifications through the same portal where they submitted the original materials. No email chains. No confusion about status.

Comprehensive audit trails for compliance. Every human decision is logged with timestamps, reviewer identification, and the action taken. For regulated industries, this creates the accountability documentation that audits require.

"Moxo streamlines the onboarding process!" notes one G2 reviewer, highlighting how centralized workflows improve clarity and reduce manual work.

The path forward: Implementing HITL workflows that scale without sacrificing oversight

Human-in-the-loop AI is evolving beyond its data science origins. For operations and service teams, the challenge isn't training models. It's managing the review tasks that AI-assisted processes create, coordinating decisions across teams, keeping clients informed, and maintaining the audit trails that compliance requires. Most HITL tools ignore this operational layer entirely, leaving teams to improvise with email, chat, and spreadsheets.

Moxo provides the workflow orchestration that makes HITL practical for business processes. With automated task routing, rich context for reviewers, client-facing coordination, and comprehensive audit trails, Moxo enables scalable human review without bottlenecks.

Get started with Moxo to build AI-assisted workflows that combine automation speed with human precision.

FAQs

What is human-in-the-loop AI?

Human-in-the-loop AI refers to systems where humans and AI collaborate rather than AI operating autonomously. AI handles routine processing, while humans validate outputs, correct errors, and make judgment calls on edge cases. For operations teams, this means managing the review tasks that emerge when AI flags exceptions in business processes.

How does HITL differ for operations teams versus data scientists?

Data scientists use HITL to label training data and improve model accuracy. Operations teams use HITL to manage the review workflows that AI-assisted processes create. The focus shifts from algorithm improvement to task routing, decision capture, audit trails, and client communication.

What are the best practices for designing review workflows?

Effective review workflows use confidence-based routing to send only low-confidence items to humans, preserve full context so reviewers can make informed decisions, keep clients informed of status, and log every correction for audit and improvement purposes.

When should humans intervene versus letting AI run autonomously?

Human intervention is appropriate for low-confidence AI outputs, edge cases without clear precedent, decisions with significant compliance or financial implications, and situations requiring contextual judgment. High-confidence routine tasks can typically proceed automatically.

What software supports effective HITL review workflows?

Effective HITL software for operations teams provides task routing based on roles, context preservation for informed decisions, client-facing coordination, and audit trails for compliance. Platforms like Moxo focus on orchestrating these operational workflows rather than ML model training.

How do teams measure HITL's impact on workflow efficiency?

Key metrics include exception resolution time, reviewer workload distribution, client response time on flagged items, and audit trail completeness. Tracking these metrics reveals where processes need adjustment and demonstrates the operational value of structured HITL workflows.
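Two of these metrics, exception resolution time and reviewer workload distribution, can be computed directly from task records. The sketch below assumes a hypothetical record shape (a dict with `reviewer`, `flagged_at`, and `resolved_at` keys); client response time and audit trail completeness would need additional fields.

```python
from collections import Counter
from statistics import median


def hitl_metrics(tasks):
    """Summarize review tasks.

    Each task is a dict with hypothetical keys 'reviewer', 'flagged_at'
    and 'resolved_at' (datetimes); unresolved tasks have resolved_at=None.
    """
    resolved = [t for t in tasks if t["resolved_at"] is not None]
    hours = [(t["resolved_at"] - t["flagged_at"]).total_seconds() / 3600
             for t in resolved]
    return {
        "open": len(tasks) - len(resolved),
        # Median rather than mean, so one stuck task doesn't mask typical speed.
        "median_resolution_hours": median(hours) if hours else None,
        # Count of all assigned tasks (open and resolved) per reviewer.
        "workload": Counter(t["reviewer"] for t in tasks),
    }
```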