
There's a particular kind of executive panic that happens when someone asks, "Wait, what decision did the AI make and why?" and nobody in the room can answer.

Human-in-the-loop (HITL) AI governance embeds human judgment at critical decision points in agentic workflows so autonomous systems can move fast without becoming black boxes that nobody understands, trusts, or can explain to regulators.

It's the difference between "the AI handles it" and "the AI prepares it, a human decides, and everyone knows who's accountable."

As organizations deploy agentic AI systems that plan, decide, and act autonomously, the balance between automation efficiency and risk management becomes the whole game. HITL is the governance architecture that lets you scale AI-driven execution while keeping humans accountable for decisions that actually matter.

In this guide, you will learn how to use HITL automation to your advantage and keep control and safety at the heart of the process.

Key takeaways

HITL is essential governance infrastructure. It ensures accountability, transparency, and alignment with organizational values in autonomous systems.

Agentic AI's "black box" risk is a compliance liability. Opaque decision logic and unpredictable actions make HITL safeguards non-negotiable in regulated environments.

Effective checkpoint design balances autonomy with oversight. The goal is bounded autonomy where agents act on predictable work while humans intervene on exceptions.

Auditability separates "we use AI" from "we govern AI responsibly." Traceable handoffs and documented approvals are what hold up to regulatory scrutiny.

Understanding human-in-the-loop AI governance

HITL governance means AI systems are designed so humans interact at critical decision points, reviewing, approving, or adjusting AI recommendations before final action is taken.

This isn't about distrust. It's about responsibility architecture. In any complex process, there's work that requires human judgment (approvals, exceptions, risk assessments) and work that doesn't (validation, routing, preparation, follow-ups). HITL separates the two so each gets handled by whoever does it best.

In agentic AI systems, where autonomy extends beyond simple prompts into planning and multi-step execution, this distinction becomes critical.

Article 14 of the EU AI Act explicitly requires that high-risk AI systems "be designed and developed in such a way [...] that they can be effectively overseen by natural persons during the period in which they are in use."

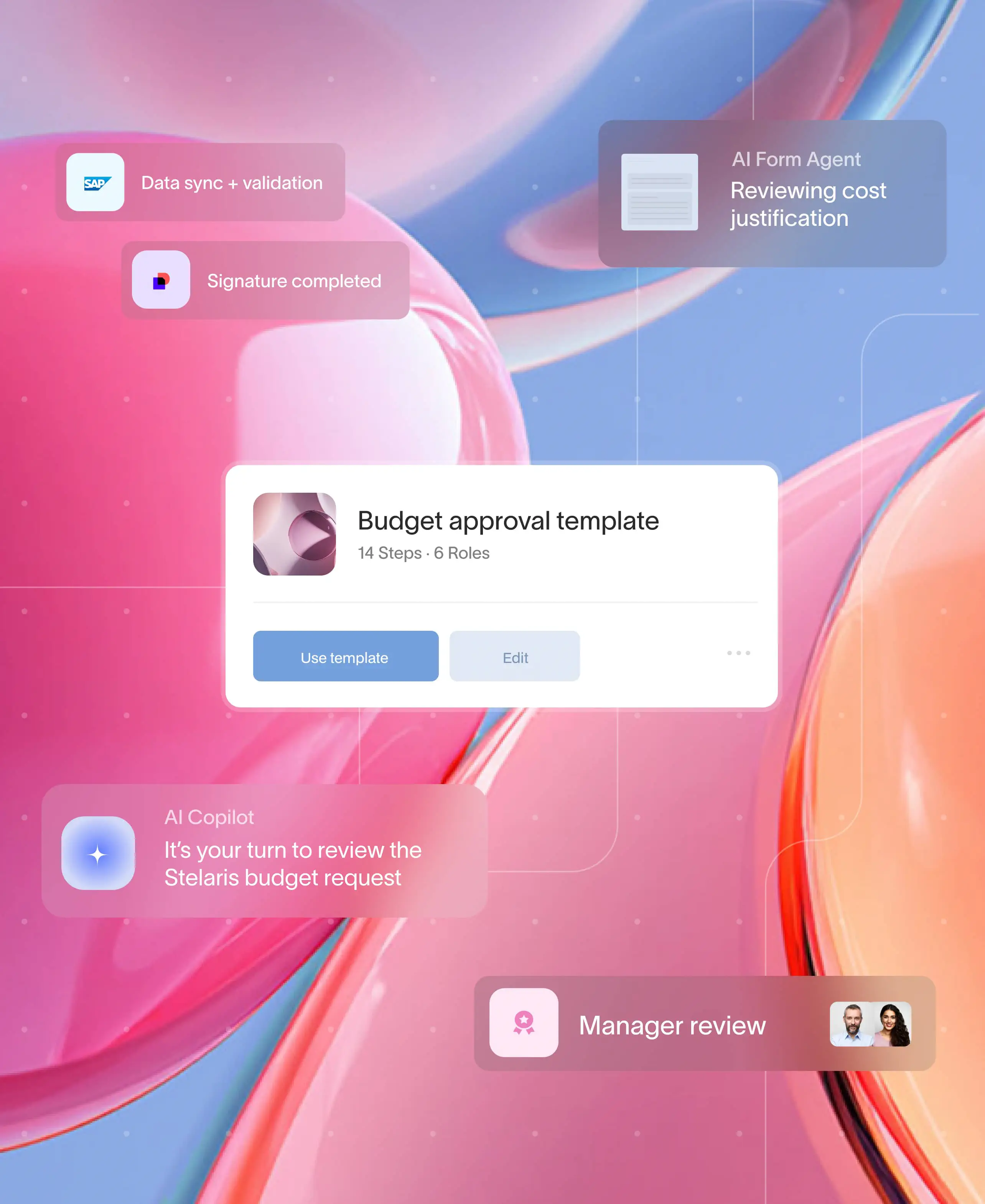

Moxo's AI-driven workflow automation is built around this principle. AI agents handle the heavy lifting while humans stay in control, reviewing key decisions and ensuring governance and auditability.


The "black box" risk in agentic AI

When internal decision logic isn't transparent, you can't explain why a particular action was taken. That's a problem that compounds across multi-step workflows.

An autonomous agent makes a decision deep in a process flow. That decision triggers downstream actions across three departments. Two weeks later, someone asks why a customer commitment was made that violates policy, and the answer is: "The AI did it, and we're not sure exactly how it got there."

Unanticipated outcomes surface late, often after damage is done. An error in step three might not become visible until step twelve, by which point the blast radius has expanded considerably.

Compliance becomes guesswork. Regulators increasingly expect human oversight in high-risk AI systems. If your audit trail is "the model decided," you're going to have a difficult conversation.

Operational uncertainty compounds. Without controlled checkpoints, teams lose the ability to explain, correct, or halt autonomous decisions before they cascade.

Moxo addresses this directly through traceable approvals and audit trails. Every workflow action is tracked and logged, ensuring accountability, governance, and compliance.

"We moved from five different systems to one Moxo portal, and now our board reviews are backed by data we can trust." - G2 reviewer

Designing effective HITL checkpoints

The goal isn't human review of everything. It's human accountability where it matters.

Poorly designed HITL becomes a bottleneck. Well-designed HITL becomes a governance feature that enables faster, more confident execution. The difference is intentionality.

Identify high-risk decision nodes. Not every step requires human review. Focus HITL on moments where errors are costly: compliance decisions, customer commitments, financial approvals, exceptions that fall outside policy. Define risk thresholds that automatically trigger human review when crossed.

Balance autonomy and oversight. Guardrails should empower agents to act on predictable tasks while humans intervene on exceptions and complex judgments. If every step requires approval, you've recreated the manual process with extra steps.

Integrate explainability tools. Tools that surface AI reasoning help reviewers understand why an agent recommended an action. A human reviewing an AI recommendation without context is just rubber-stamping; a human reviewing with full reasoning can actually exercise judgment.

Establish feedback loops. Human overrides shouldn't be treated as one-off exceptions. They should be training data. Design processes where human corrections inform future agent behavior, creating continuous improvement.
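To make the feedback-loop idea concrete, here is a minimal sketch of how overrides could be captured as labeled examples. The `ReviewOutcome` schema and both helper functions are hypothetical names chosen for illustration, not part of any specific platform.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewOutcome:
    """One human review of an agent recommendation (hypothetical schema)."""
    case_id: str
    agent_action: str   # what the agent recommended
    human_action: str   # what the reviewer finally decided
    reason: str         # reviewer's stated reason, kept as a label

    @property
    def overridden(self) -> bool:
        # An override is any review where the human changed the agent's action.
        return self.agent_action != self.human_action


def override_examples(outcomes):
    """Keep only the overrides, shaped as rows a later tuning step could consume."""
    return [
        {
            "case_id": o.case_id,
            "rejected": o.agent_action,
            "corrected": o.human_action,
            "reason": o.reason,
        }
        for o in outcomes
        if o.overridden
    ]


def override_reasons(outcomes):
    """Count override reasons so recurring failure modes stand out."""
    return Counter(o.reason for o in outcomes if o.overridden)
```

How the collected rows feed back into agent behavior (prompt updates, rule changes, fine-tuning) depends on your stack. The point of the sketch is only that each override is stored with its reason instead of being discarded.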

Moxo's workflow builder lets operations teams configure exactly which steps require explicit human review. Automated escalation triggers notify reviewers when agents encounter ambiguous outcomes, confidence below thresholds, or compliance rule hits.
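As a rough illustration of threshold-based escalation, the sketch below checks one agent step against three triggers: low confidence, a value over a limit, and any compliance rule hit. The `AgentStep` fields and the threshold values are assumptions made for this example. It is not Moxo's configuration format.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds; real values would come from your risk policy.
CONFIDENCE_FLOOR = 0.85
AMOUNT_CEILING = 10_000.0


@dataclass
class AgentStep:
    """A single step an agent wants to execute (illustrative schema)."""
    step_id: str
    confidence: float                 # agent's self-reported confidence, 0.0 to 1.0
    amount: float = 0.0               # monetary value at stake, if any
    compliance_hits: list = field(default_factory=list)  # names of rules that fired


def escalation_reasons(step):
    """Return every reason this step should go to a human; empty means proceed."""
    reasons = []
    if step.confidence < CONFIDENCE_FLOOR:
        reasons.append("low_confidence")
    if step.amount > AMOUNT_CEILING:
        reasons.append("amount_over_limit")
    if step.compliance_hits:
        reasons.append("compliance_rule_hit")
    return reasons


def needs_human_review(step):
    """True when at least one escalation trigger fired."""
    return bool(escalation_reasons(step))
```

Returning the list of reasons, not just a yes/no flag, gives the reviewer the context for why the step landed in their queue.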

HITL for workflow safety and ethical alignment

In operationally critical workflows, HITL protects organizations from unsafe, biased, or unexplainable decisions.

Humans bring context, ethics, and judgment that no autonomous system can fully replicate. That separation isn't a limitation of AI; it's how accountability and governance are preserved in real operations.

Fairness and bias mitigation. Human reviewers can catch decisions influenced by biased training data or edge cases the model handles poorly. An agent might process a thousand applications correctly and still unfairly disadvantage the thousand-and-first.

Liability management. Clear human checkpoints tie accountability to individuals or roles. When something goes wrong, you need to answer: who reviewed this, what information did they have, and what decision did they make?

Audit readiness. Documentation of human approvals and overrides creates traceable evidence for internal and external audits. The compliance officer asks for an audit trail and you can actually provide one.

How Moxo enables auditable HITL governance

Moxo's approach to HITL focuses on structured handoffs where human judgment and AI coordination meet with full traceability.

The platform separates what AI should handle (preparation, validation, routing, monitoring) from what humans must own (decisions, approvals, exceptions).

Defined decision boundaries. Operations teams configure which steps require explicit human review, whether for compliance, accuracy, or risk reasons. The workflow knows where autonomy ends and judgment begins.

Traceable approvals. Every HITL checkpoint includes contextual records: who reviewed, what decision was made, why exceptions were triggered. This audit trail turns "we have AI governance" from a claim into a demonstrable fact.

Automated escalation triggers. When agents encounter ambiguous outcomes or compliance rule hits, human reviewers are instantly notified. Escalation isn't manual follow-up. It's built into the process architecture.
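For a sense of what a traceable approval record might contain, here is a minimal sketch of an append-only audit log that stores who reviewed, what they decided, and why the checkpoint was triggered. The class, its field names, and the hash chaining are assumptions added for illustration. Nothing here describes how Moxo stores its audit trail.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only log of HITL decisions (hypothetical design).

    Each entry stores the hash of the previous entry, so editing an
    earlier record after the fact makes verify() fail.
    """

    def __init__(self):
        self._entries = []

    @staticmethod
    def _digest(entry):
        # Hash every field except the hash itself, with stable key order.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def record(self, checkpoint, reviewer, decision, trigger, when=None):
        """Append one decision: who reviewed, what they decided, why it was raised."""
        entry = {
            "checkpoint": checkpoint,
            "reviewer": reviewer,
            "decision": decision,
            "trigger": trigger,
            "at": (when or datetime.now(timezone.utc)).isoformat(),
            "prev_hash": self._entries[-1]["hash"] if self._entries else "",
        }
        entry["hash"] = self._digest(entry)
        self._entries.append(entry)
        return dict(entry)

    def verify(self):
        """Recompute the chain; False means some entry was altered or reordered."""
        prev = ""
        for entry in self._entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True
```

A production system would persist these entries somewhere durable and access-controlled. The sketch only shows the shape of a record that can answer "who decided what, and why was it escalated?"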

Here's what this looks like in practice. A process triggers when an agentic workflow encounters a decision exceeding defined risk parameters. An AI agent reviews context, flags the exception, and prepares the approval request with relevant details.

The workflow routes to the appropriate reviewer, notifying each only when their judgment is required. The reviewer sees full context, makes the call, and approves or escalates. The process moves forward without side emails or "just checking in" messages. Everyone sees exactly where it stands and who decided what.
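The flow described above can be sketched in a few lines: the agent prepares a request, the workflow routes it by risk, and the reviewer either approves or escalates. The role names, risk cut-offs, and function names below are all hypothetical, chosen only to make the handoff visible.

```python
from dataclasses import dataclass, field

# Hypothetical routing table: (minimum risk score, reviewer role), highest first.
ROUTES = [(0.8, "compliance_officer"), (0.5, "team_lead")]
# Hypothetical escalation path: who a role hands off to.
ESCALATION_PATH = {"team_lead": "compliance_officer"}


@dataclass
class ApprovalRequest:
    """What the agent prepares for the reviewer (illustrative schema)."""
    request_id: str
    summary: str
    risk_score: float
    assignee_role: str = ""
    status: str = "pending"            # pending -> approved
    history: list = field(default_factory=list)


def route(request):
    """Assign the request to the first role whose risk floor it meets."""
    for min_risk, role in ROUTES:
        if request.risk_score >= min_risk:
            request.assignee_role = role
            request.history.append(("routed", role))
            return request
    raise ValueError("risk below every review threshold; no checkpoint needed")


def decide(request, reviewer, role, action):
    """Apply a reviewer's call: 'approve' closes it, 'escalate' reassigns it."""
    if role != request.assignee_role:
        raise PermissionError(f"{role} is not the assigned reviewer role")
    if action == "approve":
        request.status = "approved"
    elif action == "escalate":
        if role not in ESCALATION_PATH:
            raise ValueError(f"{role} has no one to escalate to")
        request.assignee_role = ESCALATION_PATH[role]
    else:
        raise ValueError(f"unknown action: {action}")
    request.history.append((action + "d", reviewer))
    return request
```

The `history` list is what lets everyone see where the request stands and who decided what, without side emails.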

Learn more about Moxo's AI workflow automation and how it keeps humans in control while AI handles the heavy lifting.

Best practices for HITL governance in agentic workflows

Sustainable HITL governance requires clear policies, continuous monitoring, and teams equipped to work alongside AI.

Policy and role clarity. Define who is responsible for what. Ambiguity about roles creates gaps that autonomous systems will happily exploit.

Continuous monitoring. Agent performance drifts. Regularly review how often human intervention is triggered, what types of exceptions occur, and whether governance policies need adjustment.

Framework alignment. Align HITL checkpoints with enterprise risk frameworks (for example, the NIST AI Risk Management Framework or ISO/IEC 42001) and internal audit schedules. Governance that exists outside your broader compliance structure is governance that will eventually be ignored.

Training for human-AI collaboration. Equip staff to work effectively with AI systems, understanding AI reasoning, recognizing common failure modes, and knowing when to override versus when to trust.
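The continuous-monitoring practice above comes down to a few numbers you can compute from checkpoint logs. The sketch below assumes each logged event is a dict with an `escalated` flag and an optional `reason`. That event shape, the function name, and the default tolerance are illustrative assumptions.

```python
from collections import Counter


def intervention_report(events, baseline_rate=None, drift_tolerance=0.10):
    """Summarize how often humans had to step in, and why.

    events: iterable of dicts like {"escalated": bool, "reason": str or None}
    baseline_rate: the intervention rate you consider normal, if known
    drift_tolerance: absolute change in rate that should prompt a policy review
    """
    events = list(events)
    escalated = [e for e in events if e["escalated"]]
    rate = len(escalated) / len(events) if events else 0.0
    drifted = (
        baseline_rate is not None
        and abs(rate - baseline_rate) > drift_tolerance
    )
    return {
        "total": len(events),
        "intervention_rate": rate,
        "reasons": Counter(e.get("reason") for e in escalated),
        "drifted": drifted,
    }
```

A rising rate can mean the agent is degrading or the thresholds are too tight. A falling rate can mean the agent improved or reviewers stopped being asked. Either direction is a reason to look, which is why the check uses the absolute difference.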

Moxo supports this through operational dashboards that provide real-time visibility into where work stands, what's blocked, and what's moving, so teams can intervene before cycle times slip or SLAs are missed.

Ensuring control and safety in your agentic AI systems

As agentic AI systems become more capable and autonomous, trust, safety, and compliance cannot be assumed. They must be engineered.

Human-in-the-loop governance isn't a concession to caution. It's a practical framework that embeds human judgment into autonomous workflows without sacrificing the efficiency gains that make agentic AI valuable. The organizations that scale AI successfully will be the ones that design accountability into their systems from the start.

AI handles coordination. Humans handle judgment. That's not a compromise. That's the model.

Ready to implement HITL governance in your agentic workflows? Get started with Moxo to build auditable checkpoints, traceable approvals, and compliant AI operations.

FAQs on human-in-the-loop AI governance

What exactly is HITL AI governance?

Human-in-the-loop AI governance embeds human oversight into AI decision and workflow processes, ensuring that critical decisions are reviewed or approved by people before final action. It's not about slowing AI down. It's about maintaining accountability where it matters.

Why does agentic AI specifically need HITL?

Agentic AI systems act autonomously across multiple steps, which increases the risk of opaque or unintended actions compounding before anyone notices. HITL ensures accountability, safety, and ethical alignment by creating explicit intervention points in autonomous workflows.

Won't HITL checkpoints slow down automation?

Only if they're poorly designed. Effective HITL governance balances human oversight with automation efficiency by triggering intervention only where decisions carry real risk. The goal is bounded autonomy, not blanket review.

How do you design HITL checkpoints that actually work?

Identify high-risk decisions where errors are costly. Incorporate explainability tools so reviewers understand AI reasoning. Build feedback loops where human corrections improve future agent behavior. Focus human judgment on exceptions, not routine execution.

Is HITL legally required for AI systems?

In many jurisdictions, human oversight in high-risk AI systems is mandated or strongly recommended. The EU AI Act's Article 14 explicitly requires it for systems classified as high-risk. Even where not legally required, HITL is increasingly expected as a governance best practice.