Most teams evaluating agentic AI frameworks are asking the wrong question.

They compare features: "Does it support multi-agent?" "Can it integrate with our tools?" "Is it open source?" These matter, but they miss the architectural reality that determines whether your AI deployment actually works in production.

The right question is: How does this framework's architecture affect performance, scalability, and the ability to keep humans accountable for decisions?

Recent benchmarking data from AIMultiple makes this concrete. When researchers tested four leading frameworks across identical data analysis tasks, LangGraph consistently delivered the lowest latency while LangChain consumed the most tokens and time. Same tasks. Same dataset. Same LLM. Dramatically different results.

The difference isn't magic. It's architecture. And understanding that architecture is what separates teams that scale agentic AI from teams stuck in pilot mode.

Key takeaways

Framework architecture determines real-world performance. LangGraph's graph-based approach minimizes LLM involvement and delivers the fastest execution. LangChain's chain-first design introduces overhead that compounds across complex workflows.

Multi-agent orchestration isn't universal. CrewAI and LangGraph handle it natively. LangChain requires manual implementation. OpenAI Swarm keeps it lightweight but limited.

Memory and state management separate prototypes from production. Frameworks range from fully stateless (Swarm) to layered persistent memory (CrewAI). Your workflow requirements should drive this choice.

Human-in-the-loop support varies significantly. Some frameworks build in breakpoints and approval mechanisms. Others require custom implementation, adding development time and risk.

What agentic AI frameworks actually do

An agentic AI framework handles the infrastructure between your LLM and real-world actions. At a basic level, it structures prompts so the model responds predictably and routes responses to the right tool, API, or database.

Without a framework, you'd manually define every prompt, extract which tool the LLM wants to use, and trigger the corresponding API call. Frameworks streamline this through prompt orchestration, tool integration, memory management, RAG integration, and multi-agent coordination.
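To make the manual path concrete, here is a minimal sketch of what "no framework" looks like. It is illustrative only: `fake_llm`, `get_weather`, `add`, and the JSON reply format are assumptions made for this example, not part of any framework discussed here.

```python
import json

# Hypothetical tools: plain functions standing in for real APIs.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

def add(a: float, b: float) -> float:
    return a + b

TOOLS = {"get_weather": get_weather, "add": add}

def build_prompt(question: str) -> str:
    """Hand-written prompt asking the model to answer with a JSON tool call."""
    tool_names = ", ".join(sorted(TOOLS))
    return (
        f"Available tools: {tool_names}\n"
        'Reply only with JSON like {"tool": "<name>", "args": {...}}\n'
        f"Question: {question}"
    )

def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call (assumption); returns a canned request."""
    return '{"tool": "add", "args": {"a": 2, "b": 3}}'

def run_once(question: str, llm=fake_llm):
    prompt = build_prompt(question)
    raw = llm(prompt)
    request = json.loads(raw)           # extract which tool the model wants
    tool = TOOLS.get(request["tool"])
    if tool is None:
        raise ValueError(f"Unknown tool: {request['tool']}")
    return tool(**request["args"])      # trigger the corresponding call

result = run_once("What is 2 + 3?")
```

Every piece here (prompt template, parsing, dispatch, error handling) is code you would own and maintain yourself, which is the work a framework takes over.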

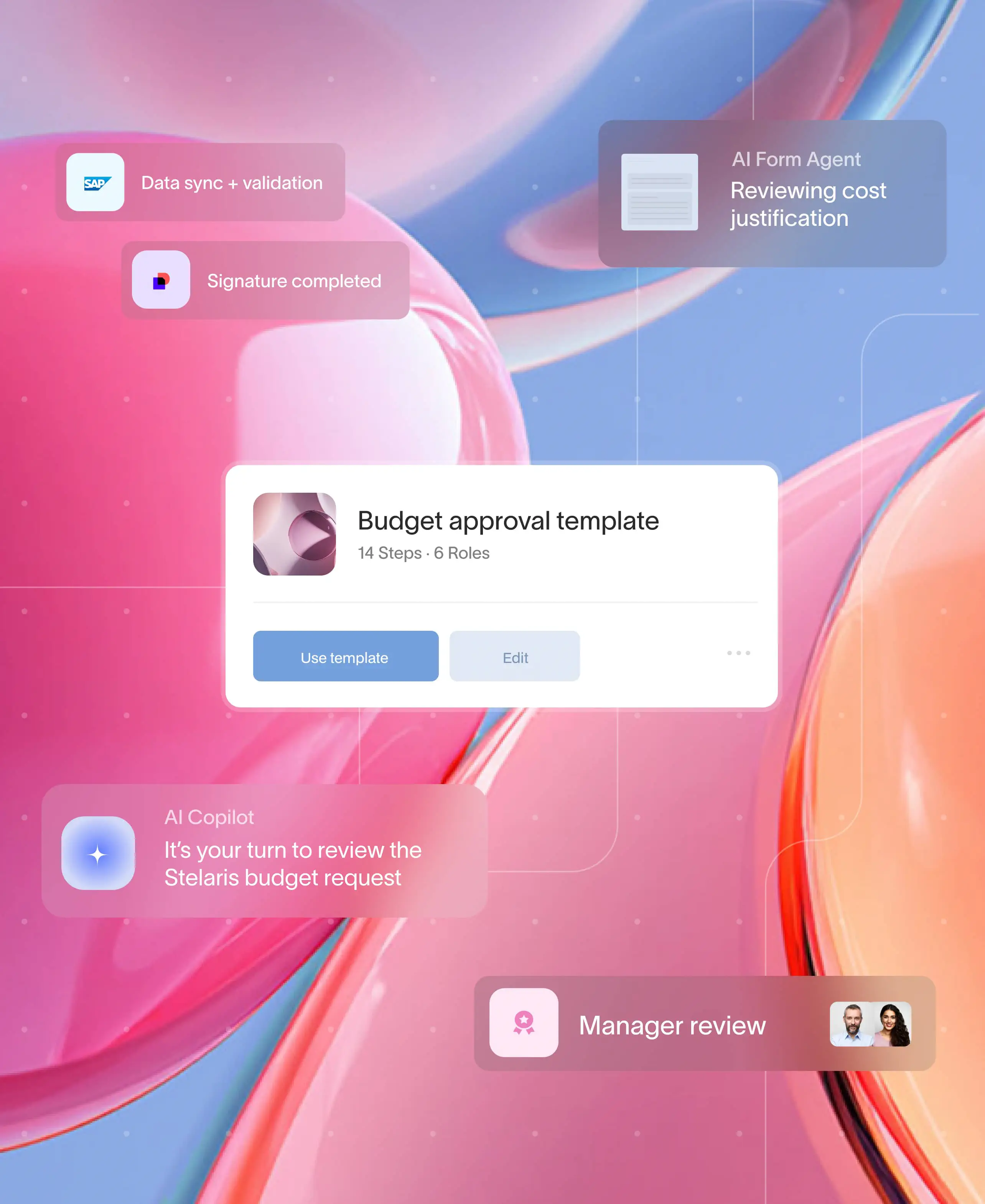

These capabilities distinguish agentic frameworks from basic LLM wrappers. But frameworks solve for agent intelligence. They are less equipped to coordinate external stakeholders, enforce compliance, and maintain accountability across multi-party workflows. That's where process orchestration platforms like Moxo add value, sitting above the agent framework to handle the human coordination layer.

Agentic AI frameworks benchmark: Performance comparison

AIMultiple's benchmark tested CrewAI, LangChain, OpenAI Swarm, and LangGraph by implementing the same four data analysis tasks in each framework. Each task ran 100 times to measure consistency under realistic workloads.

LangGraph consistently recorded the lowest latency. LangChain lagged significantly on both latency and token consumption.
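If you want to run a similar comparison on your own tasks, a repeated-run harness might look like the sketch below. This is not AIMultiple's harness, which the article does not describe. `benchmark` and `sample_task` are hypothetical names, and the placeholder workload stands in for a real agent invocation.

```python
import statistics
import time

def benchmark(task, runs: int = 100):
    """Run `task` repeatedly and summarize wall-clock latency in seconds."""
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        task()
        latencies.append(time.perf_counter() - start)
    return {
        "runs": runs,
        "mean": statistics.mean(latencies),
        "median": statistics.median(latencies),
        # Standard deviation needs at least two samples.
        "stdev": statistics.stdev(latencies) if runs > 1 else 0.0,
        "max": max(latencies),
    }

def sample_task():
    # Placeholder workload; a real benchmark would invoke the agent here.
    sum(i * i for i in range(1_000))

summary = benchmark(sample_task, runs=100)
```

Reporting the spread (standard deviation, maximum) alongside the mean matters because repeated runs are about consistency, not just average speed.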

According to PwC's 2025 AI Agent Survey, 79% of organizations have adopted AI agents, but broad adoption doesn't always mean deep impact. Many deployments stall because the framework can't handle production requirements.

For workflows requiring external stakeholder coordination, Moxo's workflow orchestration capabilities bridge the gap between agent intelligence and operational execution.

Why frameworks perform differently: Architecture matters

The benchmark results trace directly to architectural decisions.

LangGraph's efficiency. LangGraph defines tasks as a graph of nodes and edges (a directed acyclic graph, or DAG, in the simplest case, although cycles are also supported) where the tool executed at each step is predetermined. The LLM only gets involved at ambiguous decision points, which minimizes token consumption and execution time.

CrewAI's native multi-agent design. CrewAI was built from the ground up for multi-agent systems. Task delegation, inter-agent communication, and state management happen centrally at the framework level. Tools connect directly to agents with minimal middleware.

LangChain's chain-first limitation. LangChain was designed for single-agent, chain-based workflows. Multi-agent support was added later. Each step requires the LLM to interpret natural language input and select which tool to use, compounding latency across complex workflows.

Swarm's efficiency trade-off. OpenAI Swarm distributes tasks among specialized agents with tools as native Python functions. This delivers low latency but sacrifices built-in state management.
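The gap between predetermined graph steps and per-step tool selection can be illustrated with a framework-agnostic sketch that simply counts model calls. Nothing here uses the LangGraph or LangChain APIs. `CountingLLM`, the four tools, and the "pick the first option" policy are all assumptions made for illustration.

```python
class CountingLLM:
    """Stand-in for a model client that counts how often it is called."""

    def __init__(self):
        self.calls = 0

    def choose(self, options, context):
        self.calls += 1
        # Hypothetical policy: pick the first option. A real model would reason.
        return options[0]

# Hypothetical tools for a small data analysis pipeline.
def load(data):
    return data + ["loaded"]

def clean(data):
    return data + ["cleaned"]

def summarize(data):
    return data + ["summarized"]

def chart(data):
    return data + ["charted"]

TOOLS = {"load": load, "clean": clean, "summarize": summarize, "chart": chart}

def run_graph(llm):
    """Graph style: edges are fixed; the model is consulted only at a branch."""
    data = clean(load([]))
    branch = llm.choose(["summarize", "chart"], data)  # the one ambiguous step
    return TOOLS[branch](data)

def run_chain(llm, steps=3):
    """Chain style: the model picks the tool at every step."""
    data = []
    remaining = ["load", "clean", "summarize", "chart"]
    for _ in range(steps):
        name = llm.choose(remaining, data)
        data = TOOLS[name](data)
        remaining.remove(name)
    return data

graph_llm, chain_llm = CountingLLM(), CountingLLM()
graph_result = run_graph(graph_llm)
chain_result = run_chain(chain_llm)
```

Both runs produce the same output, but the graph version makes one model call while the chain version makes three. In a real deployment each of those calls costs tokens and a network round trip, so the difference grows with workflow length.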

Gartner projects that by the end of 2026, 40% of enterprise applications will include task-specific AI agents. Even with the right framework, scaling to that level requires more than agent intelligence.

Moxo addresses this by embedding AI agents inside workflows, handling validation, routing, and follow-ups while humans retain accountability for decisions.

Comparing multi-agent orchestration capabilities

Multi-agent orchestration coordinates multiple specialized AI agents to tackle workflows exceeding single-agent capabilities.

LangGraph models agents as nodes within a graph structure, each maintaining its own state with conditional logic connections.

CrewAI assigns roles (Researcher, Developer) with specific tools, handling orchestration logic natively.

AutoGen enables asynchronous message-passing between agents for research and prototyping.

OpenAI Swarm uses lightweight routine-based handoffs.

LangChain operates through single-agent patterns with extensions for multi-agent setups.
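As one illustration of the lightest of these patterns, a handoff can be as simple as a handler that returns the next agent. The sketch below is plain Python written in the spirit of routine-based handoffs. It is not the Swarm API, and `Agent`, `triage`, `billing`, and `run` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

@dataclass
class Agent:
    name: str
    # A handler returns a reply plus the agent to hand off to (or None to stop).
    handle: Callable[[str], Tuple[str, Optional["Agent"]]]

def billing_handle(message):
    return ("Refund issued.", None)

billing = Agent("billing", billing_handle)

def triage_handle(message):
    if "refund" in message.lower():
        return ("Routing to billing.", billing)
    return ("How can I help?", None)

triage = Agent("triage", triage_handle)

def run(agent, message, max_handoffs=5):
    """Follow handoffs until an agent returns no successor."""
    trail = []
    for _ in range(max_handoffs + 1):
        reply, next_agent = agent.handle(message)
        trail.append((agent.name, reply))
        if next_agent is None:
            break
        agent = next_agent
    return trail

trail = run(triage, "I need a refund")
```

Nothing is remembered between calls to `run`, which mirrors the stateless trade-off: the pattern is cheap and easy to follow, but any persistence has to be added by you.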

For enterprise workflows involving external parties, orchestration must extend beyond agents to people.

Moxo's approval workflow capabilities route decisions to the right stakeholders at the right moment, ensuring AI handles coordination while humans handle judgment.

Memory and state management

Memory capabilities determine whether agents handle isolated tasks or persistent, context-aware workflows.

LangGraph provides in-thread and cross-thread memory with configurable persistence.

CrewAI offers layered memory out of the box: ChromaDB for short-term, SQLite for task results, vector embeddings for entities.

AutoGen maintains session context without built-in persistence.

OpenAI Swarm is stateless by design.

LangChain supports customizable memory through built-in classes.
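The idea of layered memory can be sketched with the standard library alone: a bounded buffer for recent context plus a SQLite table for task results. This is an illustration of the layering, not CrewAI's implementation. `LayeredMemory` and its methods are hypothetical, and a `deque` stands in for a vector store.

```python
import sqlite3
from collections import deque

class LayeredMemory:
    """Short-term context in process memory, task results in SQLite."""

    def __init__(self, db_path=":memory:", short_term_size=3):
        # Oldest turns fall off automatically once the buffer is full.
        self.short_term = deque(maxlen=short_term_size)
        self.db = sqlite3.connect(db_path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS task_results "
            "(task TEXT PRIMARY KEY, result TEXT)"
        )

    def remember_turn(self, text):
        self.short_term.append(text)

    def save_result(self, task, result):
        self.db.execute(
            "INSERT OR REPLACE INTO task_results (task, result) VALUES (?, ?)",
            (task, result),
        )
        self.db.commit()

    def load_result(self, task):
        row = self.db.execute(
            "SELECT result FROM task_results WHERE task = ?", (task,)
        ).fetchone()
        return row[0] if row else None

memory = LayeredMemory()
for turn in ["hello", "load the dataset", "group by region", "plot totals"]:
    memory.remember_turn(turn)
memory.save_result("summary_task", "done")
```

Passing a file path instead of `":memory:"` would let task results survive a restart, which is the practical difference between session context and persistent memory.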

According to McKinsey's 2025 State of AI, only 23% of organizations are scaling agentic AI systems, while many others are still experimenting. State management is often a blocker.

Moxo complements framework memory with operational visibility across entire workflows, tracking where work stands, what's blocked, and what's moving forward.

Human-in-the-loop: Where humans stay accountable

For regulated industries and high-stakes workflows, human oversight isn't optional.

LangGraph supports custom breakpoints using interrupt_before to pause execution.

CrewAI enables feedback collection with human_input=True.

AutoGen natively supports human agents through UserProxyAgent.

OpenAI Swarm offers no built-in HITL.

LangChain allows custom breakpoints within chains.
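Whatever the framework, the underlying breakpoint pattern is the same: pause before a sensitive step and continue only on approval. The sketch below is framework-agnostic. The `interrupt_before` parameter name is borrowed from the text for readability, but this is not the LangGraph API, and `run_with_breakpoint` and the `approve` callback are hypothetical.

```python
def run_with_breakpoint(steps, interrupt_before, approve):
    """Run steps in order, asking for approval before any step named in
    `interrupt_before`. Execution stops at the first rejected step."""
    log = []
    for name, action in steps:
        if name in interrupt_before and not approve(name, log):
            log.append(f"{name}: rejected by reviewer")
            break
        log.append(f"{name}: {action()}")
    return log

steps = [
    ("draft_email", lambda: "drafted"),
    ("send_email", lambda: "sent"),
]

# In practice `approve` would prompt a person; here it is a canned decision.
approved_log = run_with_breakpoint(
    steps, {"send_email"}, approve=lambda name, log: True
)
rejected_log = run_with_breakpoint(
    steps, {"send_email"}, approve=lambda name, log: False
)
```

The reviewer sees the log of completed steps before deciding, so the approval is made with context rather than blind.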

Moxo takes human-in-the-loop further by orchestrating external stakeholder participation. AI agents handle preparation and validation. Humans handle approvals and exceptions. The process moves forward without manual chasing.

Framework selection: Matching architecture to use case

Choose LangGraph for complex workflows requiring low latency and explicit execution visibility.

Choose CrewAI for production multi-agent systems with role-based delegation.

Choose AutoGen for research and prototyping with flexible agent behavior.

Choose OpenAI Swarm for lightweight experiments.

Choose LangChain for single-agent RAG applications.

For workflows crossing organizational boundaries, pair your framework with Moxo's process orchestration. AI agents handle the coordination work. Humans handle decisions that carry accountability.

Turning agentic AI frameworks into production systems

Framework selection shapes performance, scalability, and operational reliability. LangGraph delivers the lowest latency. CrewAI provides production-ready multi-agent orchestration. LangChain works best for single-agent RAG despite higher overhead.

But framework capability alone doesn't guarantee enterprise success. Organizations scaling agentic AI pair agent frameworks with orchestration infrastructure that keeps humans accountable for decisions that matter. Start with workflow requirements. Match architecture to use case. Build the coordination layer connecting agent intelligence to human judgment.

Explore how Moxo orchestrates human + AI workflows

FAQs

What is an agentic AI framework?

An agentic AI framework provides infrastructure for building AI agents that reason, plan, and execute multi-step workflows autonomously. It handles prompt orchestration, tool integration, memory management, and coordination between multiple agents.

How do agentic AI frameworks differ from RPA?

RPA executes predefined scripts without decision-making. Agentic AI frameworks support reasoning and adaptation. Agents interpret context, handle exceptions, and coordinate with other agents or humans based on workflow state.

Which framework has the best performance?

According to AIMultiple's benchmark, LangGraph delivered the lowest latency. LangChain had the highest latency and token consumption because the LLM must interpret input and select a tool at each step.

Do I need a framework to deploy agentic AI in production?

For anything beyond basic interactions, yes. Production requires orchestration, state management, and governance controls. Frameworks provide this infrastructure. For external stakeholder workflows, platforms like Moxo add the human coordination layer.

Can these frameworks handle external stakeholders?

Most frameworks are designed for internal development. Exposing workflows to clients or partners requires additional orchestration for secure access and compliance controls.