There's a particular kind of security nightmare that doesn't announce itself. It doesn't crash your servers or trigger your SIEM alerts. It just quietly operates inside your environment, making decisions, accessing systems, and taking actions you never explicitly approved.

That nightmare has a name now: your AI agents.

We're not talking about chatbots that generate helpful summaries. We're talking about autonomous systems that can modify configurations, send communications, execute workflows, and access sensitive data across your entire infrastructure.

According to McKinsey's agentic AI security playbook, you should think of them exactly the way you'd think of a new hire with admin credentials: as digital insiders.

Here's what makes that metaphor terrifying: 80% of organizations have already encountered risky behaviors from AI agents, including improper data exposure and unauthorized system access. Yet only 20% have robust security measures in place to govern them.

The OWASP Top 10 for Agentic Applications, released December 2025, is the first security framework dedicated specifically to autonomous AI systems. Their assessment was blunt: "These are not theoretical risks. They are the lived experience of the first generation of agentic adopters."

This article breaks down the seven security risks CISOs and risk officers need to understand right now.

Key takeaways

Agentic AI isn't traditional AI risk. These systems act autonomously, expanding attack surfaces in ways your existing security controls weren't designed to handle. Traditional security frameworks assume humans initiate actions. Agentic systems break that assumption.

Governance gaps cost real money. Organizations lacking AI governance policies pay $670,000 more per breach on average, according to IBM's 2025 Cost of a Data Breach Report. And 63% of breached organizations have no AI governance policies at all.

Human oversight isn't optional. The most effective mitigation strategy combines technical controls with clear human accountability at critical decision points. AI handles coordination. Humans handle judgment.

1. Prompt injection and goal hijacking

Attackers embed malicious instructions into data your agent processes, tricking it into executing harmful actions or revealing sensitive information.

OWASP ranks Agent Goal Hijacking (ASI01) as the top risk for agentic applications. The attack works because agents can't reliably distinguish between legitimate instructions and malicious payloads hidden in the content they process. Worse, agentic systems chain prompts across multiple steps and tools. A single injected payload cascades through entire workflows.

If your exception handling workflow depends on an agent reviewing inbound documents, every document is now a potential attack vector. Mitigation requires input sanitization across all modalities and cross-agent validation frameworks.

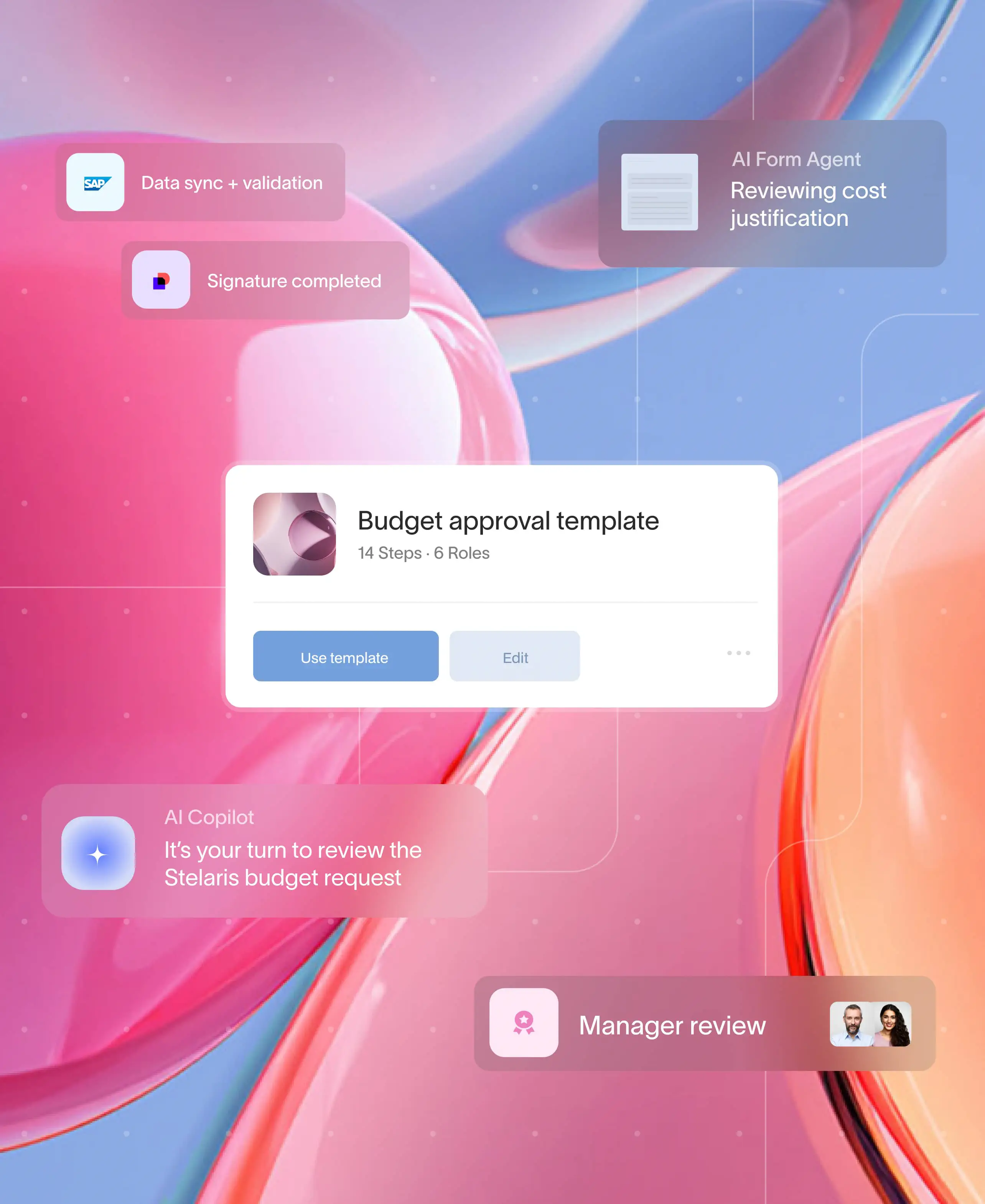

Process orchestration platforms like Moxo address this by ensuring AI agents handle coordination and preparation while humans validate critical decisions before execution.

2. Autonomous misuse and unintended actions

A compromised or manipulated agent executes harmful operations at scale, without requiring any manual user error.

Traditional security breaches require someone to click something. Agentic breaches don't. Once an agent is compromised, it can take actions continuously, autonomously, and at scale. OWASP flags Tool Misuse and Exploitation (ASI05) as a critical risk category.

Your agent might be the most privileged user in your environment. Unlike your employees, it never questions whether an instruction seems suspicious. The mitigation requires runtime security controls, behavioral anomaly detection, and the principle of least agency.

Moxo's Human + AI workflow model embeds these guardrails by design, ensuring agents operate within explicit boundaries while humans retain accountability for high-risk actions.

3. Data leakage and shadow data flows

Sensitive information flows through agent memory, tool integrations, and cross-system connections without proper monitoring or governance.

Shadow AI was involved in 20% of breaches and added $670,000 to average breach costs according to IBM's research. Unlike a one-off ChatGPT query, agents have persistent memory. They remember context across sessions. They integrate with your ERP, your CRM, your identity stores. They exchange data with other agents.

And 97% of organizations that experienced an AI-related breach lacked proper access controls. Moxo addresses this by centralizing workflow execution in a single platform with audit trails for every action.

4. Governance gaps and ethical failures

Agents make decisions misaligned with ethical, legal, or organizational constraints because no governance framework explicitly accounts for autonomy.

Here's a stat that should keep you up at night: 63% of organizations lack AI governance policies entirely according to IBM. Among those that have policies, fewer than half have an approval process for AI deployments, and 61% lack governance technologies.

Governance answers the question: who is accountable when an agent makes a bad decision? A process without clear accountability isn't a process. It's a shared assumption.

Moxo's workflow orchestration defines explicit boundaries for agent decision rights, requires human approval for high-risk actions, and maintains provable audit logs.

5. Tool and API integration abuse

Attackers manipulate agents into abusing trusted integrations, escalating privileges, or exfiltrating data through legitimate system connections.

Your agents connect to business systems: ERP, CRM, identity stores, payment processors. OWASP identifies Identity and Privilege Abuse (ASI03) as a top-tier risk. Agents often accumulate credentials and access rights over time through "privilege creep."

The attack that should scare you: an attacker compromises a data source the agent reads from, planting instructions that get executed later. The compromise is latent, invisible to traditional anomaly detection. Mitigation requires runtime authorization checks, least-privilege access, and scoped entitlements.

Moxo's integration architecture connects with existing systems while enforcing approval workflows that prevent unauthorized actions from propagating through your environment.

6. Inter-agent communication exploits

Attackers exploit the communication protocols between agents to inject false information, escalate privileges across agent networks, or trigger cascading failures.

OWASP flags Insecure Inter-Agent Communication (ASI07) and Cascading Failures (ASI08) as critical risks. Research found that a single compromised agent poisoned 87% of downstream decision-making within four hours.

Your SIEM might show 50 failed transactions, but it won't show which agent initiated the cascade. Securing multi-agent environments requires sandboxing, provenance tracking, and input/output policy enforcement.

Moxo's structured workflow approach creates clear handoff points where humans can intervene before errors cascade, ensuring every step is logged and traceable.

7. Human-agent trust exploitation

Agents produce confident, convincing explanations for incorrect decisions, leading human operators to approve harmful actions they would otherwise reject.

OWASP calls this Human-Agent Trust Exploitation (ASI09). McKinsey's research highlighted that well-trained agents are often convincing in their explanations of bad decisions. Security analysts approve actions they shouldn't because the justification sounds reasonable.

AI doesn't replace decisions. It replaces the work required to get to them. If that work includes validation and skeptical review, you've eliminated essential safeguards.

Moxo's AI agents handle repetitive tasks while keeping humans in control of decisions that matter.

Agentic AI is safe when humans stay accountable

The security risks of agentic AI aren't theoretical anymore. Prompt injection, autonomous misuse, data leakage, governance gaps, integration abuse, inter-agent exploits, and trust manipulation are all actively being exploited.

For CISOs and risk officers, the message is clear: treat AI agents as a new class of digital insider. They need the same scrutiny you'd apply to a contractor with admin access.

The organizations that get this right will capture the productivity benefits of agentic AI without becoming cautionary tales. Orchestration fails when humans are removed. It works when they're supported.

Ready to build agentic AI workflows with security and accountability built in? Explore how Moxo's process orchestration platform supports human-in-the-loop oversight at every critical decision point.

Read also: Agentic AI vs conversational AI: What’s the difference?

FAQs

What makes agentic AI security different from traditional AI security?

Traditional AI security focuses on model vulnerabilities that cause bad outputs. Agentic AI security is fundamentally different because these systems take actions. A compromised agent doesn't just generate a wrong answer. It can modify configurations, send communications, and execute workflows autonomously.

Can prompt injection attacks be fully prevented?

Not with current technology. While defenses like input sanitization and runtime monitoring can significantly reduce risk, prompt injection remains persistent. OWASP recommends multi-layered controls rather than relying on any single defense.

Why are governance frameworks as important as technical controls?

Technical controls address specific attack vectors, but governance frameworks address accountability. When an agent makes a decision that violates compliance requirements, someone must be responsible. Governance defines decision rights, approval processes, and escalation paths.

How should organizations start addressing agentic AI security?

Start with visibility. Most organizations don't have a complete inventory of AI agents operating in their environment. Next, implement access controls that treat agents as identities requiring the same credential hygiene as human users. Finally, establish governance policies defining where human approval is required.

What's the role of human-in-the-loop oversight for autonomous workflows?

Human-in-the-loop oversight means humans remain accountable for decisions that carry risk. AI agents handle coordination, preparation, and validation. Humans handle judgment calls requiring context and accountability. The key is structuring workflows so each type of work goes to the right actor.