Automation promised to eliminate manual work. Instead, many businesses discovered a frustrating truth: fully automated systems break when reality gets messy.

McKinsey research shows that only about 30% of automation initiatives deliver expected results.

The gap?

Most systems cannot handle exceptions, edge cases, or ambiguous data without human intervention. They need what experts call human-in-the-loop automation.

This guide breaks down exactly what human-in-the-loop (HITL) automation means, why full automation fails in complex workflows, practical use cases where HITL adds measurable value, and how to design exception-handling workflows that actually work. You will also learn how platforms like Moxo enable organizations to build structured human oversight into their automated processes.

Key takeaways

Human-in-the-loop automation integrates human judgment with automated systems. HITL routes low-confidence or ambiguous tasks to human reviewers, then resumes automated processing once resolved. This prevents automation errors from cascading through workflows.

Full automation fails when exceptions are unpredictable. Machine errors such as OCR misreads, low-confidence classifications, and complex approval scenarios require human oversight. Ignoring these exceptions poses compliance risks and frustrates customers.

Managing automation exceptions requires structured workflows. Best practices include early detection through confidence thresholds, efficient handoff with full context preservation, and feedback loops that train systems to reduce repeat exceptions.

The right platform makes HITL scalable. Modern workflow orchestration tools streamline exception routing, preserve decision context, and log human interventions, improving both compliance and automation accuracy over time.

What is human-in-the-loop automation

Human-in-the-loop automation refers to systems that automatically identify tasks requiring human judgment and route them to the right person at the right time. Once a human resolves the exception, the system resumes automated processing without starting over. Think of it as automation with structured checkpoints where humans step in only when needed.
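
As a rough illustration of "resumes without starting over", the following Python sketch pauses a job at the step where confidence drops and continues from the next step once a reviewer supplies a value. The step functions, the `Job` class, and the 0.8 cutoff are all hypothetical names and values chosen for this example, not any particular product's API.

```python
from dataclasses import dataclass

THRESHOLD = 0.8  # assumed cutoff; tune per workflow


# Hypothetical steps: each returns (value, confidence).
def extract(doc):
    return doc["text"].strip(), doc["ocr_confidence"]


def normalize(text):
    return text.upper(), 1.0


STEPS = [extract, normalize]


@dataclass
class Job:
    payload: object
    step: int = 0            # index of the next step to run
    waiting: bool = False    # True while a human is reviewing
    proposed: object = None  # the machine's low-confidence guess


def run(job):
    """Advance the job until it finishes or needs a human."""
    while job.step < len(STEPS):
        value, confidence = STEPS[job.step](job.payload)
        if confidence < THRESHOLD:
            job.waiting = True
            job.proposed = value  # shown to the reviewer as a starting point
            return job
        job.payload = value
        job.step += 1
    return job


def resolve(job, corrected):
    """Apply the reviewer's answer and resume after the paused step."""
    job.payload = corrected
    job.proposed = None
    job.waiting = False
    job.step += 1  # earlier steps are not rerun
    return run(job)
```

A job created with a low OCR confidence stops at step 0 with `waiting` set; calling `resolve(job, "invoice 42")` then runs only the remaining steps.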

This approach differs fundamentally from both full automation and manual workflows. Full automation assumes machines can handle every scenario. Manual workflows assume humans must touch every task. HITL finds the middle ground: let automation handle routine work while humans focus on judgment calls.

The concept matters because real-world processes rarely follow perfect patterns.

A loan application might have unusual income documentation.

A vendor invoice might list ambiguous line items.

A customer onboarding form might include data that conflicts with verification systems.

In each case, pure automation either makes costly errors or simply stops working.

With HITL, organizations preserve automation speed for straightforward tasks while building guardrails for complexity. The result is higher accuracy, better compliance, and faster end-to-end cycle times because human attention goes only where it genuinely adds value.

For a deeper look at automation fundamentals, see Where to use automated approval workflows and where to avoid them.

Why automation alone fails

Businesses invest heavily in automation, expecting dramatic efficiency gains. Yet many discover that automated systems create new problems when they encounter scenarios outside their training data or rule sets. Understanding why automation fails helps organizations design smarter HITL interventions.

Machine errors compound quickly without oversight. OCR systems misread handwritten text, scanned documents with poor image quality, or unusual fonts. If an automated system processes a misread bank statement without verification, downstream calculations inherit that error. A single-digit mistake in an account number can misdirect funds or trigger compliance alerts.

Low-confidence classifications create hidden risk. AI models assign confidence scores to their predictions. When a document classification model is only 60% certain about a file type, proceeding automatically means accepting roughly a 40% chance of misrouting, assuming the score is well calibrated. In industries like healthcare or financial services, misclassification can mean regulatory violations or patient safety issues.
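
To make the arithmetic concrete, here is a small Python sketch. It assumes the confidence score is well calibrated (a score of 0.6 means the model is right about 60% of the time), which is not guaranteed for every model. The function name and the volume of 1,000 documents are illustrative.

```python
def expected_misroutes(volume, confidence):
    """Expected number of wrong outcomes if every item at this
    confidence level is processed automatically.

    Assumes a calibrated score: P(correct) == confidence.
    """
    return volume * (1.0 - confidence)


# 1,000 documents classified at 60% confidence and never reviewed
errors = expected_misroutes(1000, 0.6)
print(round(errors))
```

Under that assumption, about 400 of every 1,000 such documents would be misrouted, which is the volume a review step is meant to intercept.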

Complex approvals resist rigid rule sets. Automated approval workflows work well for straightforward requests. But real business decisions involve exceptions: a client requests unusual payment terms, a vendor offers a discount contingent on timing, a partner agreement includes non-standard liability clauses. Rule-based automation cannot evaluate nuance. It either approves everything matching a pattern or rejects everything that does not, missing opportunities and frustrating stakeholders.

Ignoring exceptions creates operational debt. When exceptions pile up without resolution, they clog workflows. Teams develop workarounds like side email threads or spreadsheet tracking. These workarounds undermine the original automation investment by reintroducing manual coordination and eliminating audit visibility.

Yet automation alone cannot solve complex, nuanced processes where judgment calls matter. AI works best when paired with human decision-makers who can step in at critical moments, ensuring accuracy, compliance, and better outcomes at every stage.

Moxo AI addresses these failure points by embedding structured human review directly into workflows. Instead of exceptions stalling in a queue or disappearing into email, Moxo routes flagged items to designated reviewers with full context attached.

Key human-in-the-loop (HITL) use cases

Human-in-the-loop automation delivers the greatest value in scenarios where automation confidence varies or where errors carry significant consequences. The following examples demonstrate where HITL adds measurable ROI.

OCR and document processing failures

Automated document processing accelerates intake dramatically, but no OCR system achieves 100% accuracy. Low-quality scans, handwritten notes, unusual formatting, and faded text all create recognition errors. When a system flags low-confidence text extraction, human reviewers step in to verify critical fields before downstream processing continues.

This prevents incorrect data from propagating through customer records, financial calculations, or compliance filings. The ROI lever is error reduction: catching a single misread account number can prevent hours of reconciliation work or regulatory penalties.

Complex multi-party approvals

Straightforward approvals like expense reports under a threshold work fine with rules. But business-critical approvals often involve conditional logic that automation cannot capture. A contract modification might require different reviewers depending on monetary value, geographic scope, or risk category. Human judgment evaluates context that rule engines miss.
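
One way to picture this is a small routing function that decides which people must look at a contract modification, leaving the actual judgment to them. The sketch below is in Python; the role names, the 50,000 value cutoff, and the region and risk labels are hypothetical placeholders, not a recommended policy.

```python
def required_reviewers(amount, region, risk):
    """Pick human reviewers for a contract change.

    All roles and cutoffs here are illustrative assumptions.
    """
    reviewers = ["account_manager"]          # always reviews
    if amount >= 50_000:
        reviewers.append("finance_director")  # high monetary value
    if region != "domestic":
        reviewers.append("regional_counsel")  # cross-border scope
    if risk == "high":
        reviewers.append("risk_officer")      # elevated risk category
    return reviewers
```

The rules only choose who reviews; whether unusual terms are acceptable remains a human decision.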

Customer onboarding and compliance verification

KYC (Know Your Customer) and identity verification processes are high-stakes and regulation-intensive. Automated identity matching catches most cases, but mismatches between submitted documents and verification databases require human review.

A name spelling variation, an expired document, or an address discrepancy might be legitimate or might signal fraud. Human reviewers evaluate edge cases that automated systems flag.

AI classification and sentiment analysis errors

Organizations increasingly use AI to route customer inquiries, classify support tickets, or analyze feedback sentiment. But AI confidence varies.

A customer complaint phrased sarcastically might confuse sentiment models.

An inquiry touching multiple topics might receive an ambiguous classification. Routing low-confidence items to human review prevents misrouted tickets, inappropriate automated responses, or missed escalation triggers.

The ROI lever is customer experience: preventing a single high-value customer from receiving a tone-deaf automated response can preserve significant revenue.

How to manage automation exceptions

Designing effective HITL workflows requires more than just adding human review steps. Organizations need structured approaches that minimize friction while maximizing accuracy. The following principles guide successful exception management.

Detect and route exceptions early

The first step is identifying exceptions before they cause downstream problems. Automated systems should include confidence thresholds that trigger human review when scores fall below acceptable levels.

Anomaly detection rules can flag outliers like unusually large transactions, missing required fields, or data patterns that deviate from norms. Early detection prevents exceptions from compounding.
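
A minimal Python sketch of these checks might look like the following. The 0.85 threshold, the required field names, and the three-standard-deviation rule for "unusually large" are assumptions made for illustration; real values would come from your own data and risk tolerance.

```python
from statistics import mean, stdev

CONFIDENCE_THRESHOLD = 0.85                      # assumed cutoff
REQUIRED_FIELDS = ("account_number", "amount")   # hypothetical schema


def exception_reasons(item, recent_amounts):
    """Return the reasons an item needs human review (empty if none)."""
    reasons = []
    if item.get("confidence", 0.0) < CONFIDENCE_THRESHOLD:
        reasons.append("low_confidence")
    missing = [f for f in REQUIRED_FIELDS if item.get(f) in (None, "")]
    if missing:
        reasons.append("missing_fields:" + ",".join(missing))
    amount = item.get("amount")
    if amount is not None and len(recent_amounts) >= 2:
        mu, sigma = mean(recent_amounts), stdev(recent_amounts)
        # Flag amounts far outside the recent norm.
        if sigma > 0 and abs(amount - mu) > 3 * sigma:
            reasons.append("unusual_amount")
    return reasons


def route(item, recent_amounts):
    """Send the item to a human if any check fires, else automate."""
    reasons = exception_reasons(item, recent_amounts)
    return ("human_review", reasons) if reasons else ("auto", [])
```

Returning the reasons alongside the routing decision means the reviewer sees why the item was flagged, not only that it was.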

Moxo's workflow automation capabilities enable teams to configure triggers that route low-confidence items to designated reviewers automatically.

Preserve full context during handoff

When a task moves from automated processing to human review, the reviewer needs complete context to make good decisions quickly. This means including the original input data, system confidence scores, related historical records, and any automated processing already completed.

Without context, reviewers waste time reconstructing situations or make decisions without crucial information.
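
The context bundle described above can be sketched as a single record that travels with the exception. This Python example uses hypothetical field names and is only one possible shape for such a payload.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ReviewTask:
    """Everything a reviewer needs, in one place (illustrative schema)."""
    task_id: str
    reason: str                      # why the item was flagged
    original_input: dict             # untouched source data
    confidence_scores: dict          # per-field model confidence
    related_records: list = field(default_factory=list)
    completed_steps: list = field(default_factory=list)


def to_payload(task):
    """Serialize the task so it can be queued or attached to a notification."""
    return json.dumps(asdict(task), sort_keys=True)


task = ReviewTask(
    task_id="T-1",
    reason="low_confidence",
    original_input={"file": "statement.pdf"},
    confidence_scores={"account_number": 0.62},
    related_records=["prior statement, same customer"],
    completed_steps=["ocr", "field_extraction"],
)
payload = to_payload(task)
```

Keeping the original input separate from the extracted values lets the reviewer compare what the system saw with what it concluded.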

Moxo preserves complete task history and attaches all relevant documents to exception items, reducing back-and-forth and accelerating resolution.

Implement feedback loops for continuous improvement

Human decisions should train future automation. When a reviewer resolves an exception, that resolution becomes data for improving confidence thresholds, classification models, or rule sets. Over time, automation handles more cases accurately and flags fewer false positives.

This feedback loop is the difference between static automation that perpetually requires the same exceptions and adaptive systems that get smarter with use. Logging human decisions in audit trails creates the dataset needed for improvement.
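
As one simple example of such a loop, logged reviewer decisions can be used to recalibrate the confidence threshold. The Python sketch below picks the lowest threshold at which auto-processed items would have met a target accuracy. It assumes the log records, for each reviewed item, the model's confidence and whether the reviewer agreed with the model, and that the log covers a range of confidence levels (for instance through spot checks of high-confidence items). The function name and the 0.95 target are illustrative.

```python
def recalibrate_threshold(decisions, target_accuracy=0.95):
    """Find the lowest confidence cutoff that meets the accuracy target.

    decisions: list of (confidence, model_was_correct) pairs taken
    from logged human reviews. Returns None if no cutoff qualifies.
    """
    for threshold in sorted({conf for conf, _ in decisions}):
        kept = [ok for conf, ok in decisions if conf >= threshold]
        if kept and sum(kept) / len(kept) >= target_accuracy:
            return threshold
    return None


log = [
    (0.55, False), (0.62, False), (0.70, True), (0.75, False),
    (0.81, True), (0.88, True), (0.93, True),
]
new_threshold = recalibrate_threshold(log)
```

With very small logs the result is noisy, so in practice a minimum sample size per threshold would be a sensible extra guard.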

Measure and optimize exception handling metrics

Teams should track exception frequency, resolution time, automation coverage percentage, and repeat exception rates. High exception frequency might indicate poorly calibrated thresholds or upstream data quality problems.

Slow resolution times might signal unclear routing or insufficient reviewer capacity. Repeat exceptions suggest feedback loops are not working. Continuous measurement enables continuous refinement.
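
These metrics can be computed from a simple processing log. The Python sketch below assumes each log entry is a dict with an `exception` flag and, for exceptions, a `source`, a `reason`, and `resolution_minutes`; these field names are hypothetical. It treats an exception as a repeat when the same source and reason have already appeared earlier in the log, which is one of several reasonable definitions.

```python
def exception_metrics(items):
    """Summarize exception handling for a batch of processed items."""
    total = len(items)
    if total == 0:
        return {}
    exceptions = [i for i in items if i["exception"]]
    n = len(exceptions)

    # Count exceptions whose (source, reason) pair was seen before.
    seen, repeats = set(), 0
    for item in exceptions:
        key = (item["source"], item["reason"])
        if key in seen:
            repeats += 1
        seen.add(key)

    return {
        "exception_rate": n / total,
        "automation_coverage": (total - n) / total,
        "avg_resolution_minutes": (
            sum(i["resolution_minutes"] for i in exceptions) / n if n else 0.0
        ),
        "repeat_exception_rate": repeats / n if n else 0.0,
    }
```

Tracked over time, a rising repeat rate for one source is a hint that the feedback loop for that source is not taking effect.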

For practical templates that incorporate exception paths and escalation policies, see Document approval workflow: Steps, roles, and templates.

Moxo's approach: Closing the HITL loop

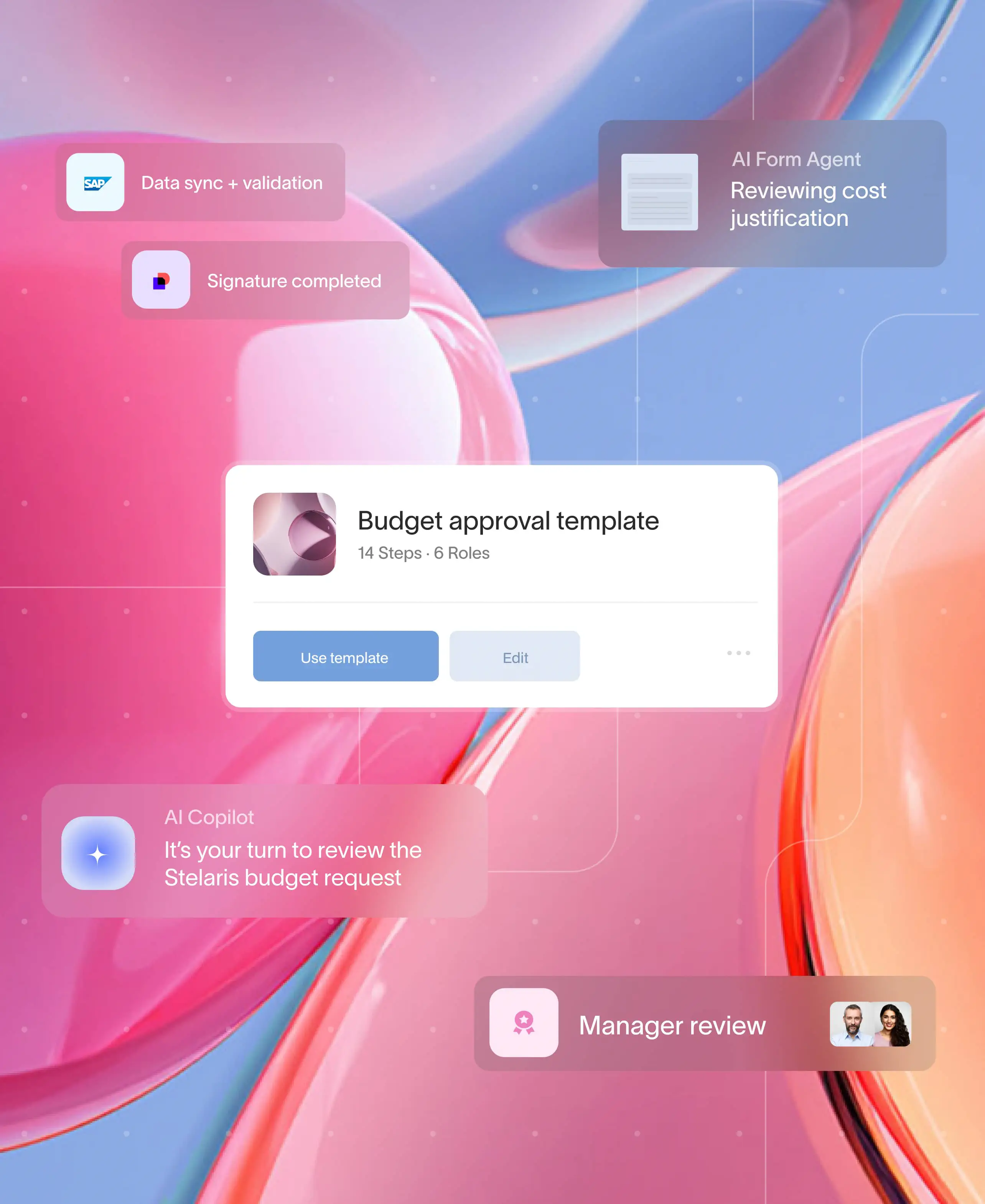

Moxo's platform operationalizes human-in-the-loop automation by integrating exception handling directly into workflow orchestration. Rather than treating exceptions as disruptions, Moxo makes them structured steps within end-to-end processes.

Intelligent exception detection and context preservation. Moxo's workflow automation enables teams to configure confidence thresholds and anomaly triggers that flag items for human review. When an exception occurs, the platform preserves complete context: original submissions, system processing history, related documents, and communication threads. Reviewers see everything they need in one place.

Seamless handoff from system to human. Moxo's Agentic AI, including the AI Review Agent, AI Support Agent, and AI Preparer Agent, can handle initial triage on flagged tasks. When items require human judgment, assignees receive notifications with direct links to review queues, and automated reminders keep exceptions moving rather than stalling in backlogs.

Structured approvals for complex scenarios. The Approvals Engine routes complex decisions through configurable multi-step approval chains. Role-based permissions ensure sensitive items reach appropriate reviewers. Audit trails capture every approval action for compliance documentation.

Feedback that improves automation. Every human decision logged in Moxo becomes part of the system record. Teams can analyze exception patterns to refine triggers, adjust thresholds, and train AI agents on edge cases. This closes the loop between human judgment and automated processing.

For workflow templates designed around client onboarding and exception handling, explore Moxo Customer Onboarding Software and Moxo Customer Success Workflow.

Conclusion

Human-in-the-loop automation represents the practical reality of modern process design. Full automation sounds appealing until edge cases, ambiguous data, and complex decisions expose its limits. HITL builds structured human oversight into automated systems, catching errors early, preserving compliance, and ensuring exceptions do not derail efficiency gains.

Organizations that invest in well-designed exception handling see higher accuracy, faster cycle times, and sustainable automation ROI.

Moxo enables teams to implement human-in-the-loop automation at scale. Workflow automation routes exceptions to the right reviewers with full context. Agentic AI handles initial triage while preserving human judgment for genuine edge cases. Audit trails capture every decision for compliance and continuous improvement.

For organizations managing high-stakes workflows like client onboarding, document verification, or multi-party approvals, Moxo transforms exception handling from a bottleneck into a competitive advantage.

Get started with Moxo to streamline your workflows with intelligent human oversight.

FAQs

What is human-in-the-loop automation?

Human-in-the-loop automation refers to systems designed to automatically route low-confidence or ambiguous tasks to human reviewers while continuing automated processing for straightforward items. Once humans resolve exceptions, the system resumes automation. This approach combines machine efficiency with human judgment for higher accuracy.

How does HITL help with managing automation exceptions?

HITL prevents exceptions from stalling or causing downstream errors. By detecting low-confidence items early and routing them to qualified reviewers with full context, organizations resolve issues faster. Structured exception handling also creates feedback loops that improve automation accuracy over time.

What are some human-in-the-loop automation examples?

Common examples include OCR verification, where humans correct misread documents; complex approval workflows, where conditional logic requires judgment; KYC and identity verification, where mismatches need human evaluation; and AI classification review, where low-confidence sentiment or category predictions receive human oversight.

When should HITL be used instead of full automation?

Use HITL when automation confidence varies significantly, when errors carry high consequences like compliance violations or customer impact, when decisions involve nuance that rule-based systems cannot capture, or when regulatory requirements mandate human review for certain transaction types. To explore approval-specific workflows with built-in exception handling, review Approval workflow: What is it, benefits, and examples and the 5 best approval workflow software tools.

Can human decisions improve automation accuracy over time?

Yes. Logging human decisions creates training data for refining confidence thresholds, adjusting classification models, and improving rule sets. Organizations that implement feedback loops between human review and automated systems see steadily increasing automation coverage with decreasing exception rates.

How does Moxo handle exception handoffs between system and humans?

Moxo preserves complete context when routing exceptions to human reviewers. This includes original submissions, processing history, related documents, and communication threads. Automated notifications alert assignees, while reminders prevent exceptions from stalling in queues.

What metrics should I track to measure HITL success?

Key metrics include exception frequency as a percentage of total volume, average resolution time, automation coverage percentage, repeat exception rate, and reviewer capacity utilization. Tracking these metrics enables continuous optimization of thresholds, routing rules, and feedback loops.